The internet is an incredible tool that has revolutionized the way we live, including how we work, learn, socialize, consume entertainment, shop, and more. It provides a virtually endless source of information and has completely changed the way we communicate. Although it now plays a significant role in our daily lives, the internet is a relatively new invention. Most people over the age of 35, or even 30, will remember a time when the internet didn’t feature in everyday life.

The invention of the internet didn’t happen overnight, and like most major breakthroughs, it was developed as a sequence of expansions on an original idea.

In this article, we explore the origins of the internet and the key players involved. Let’s take a step back in time and see how the world wide web came to be.

Early work by the ARPA

We have to go back to the space race to understand how the idea of the internet first started to percolate in the minds of computer scientists and engineers.

In 1957, the Soviet Union launched its satellite Sputnik. The event caught US strategists and military planners completely off guard: they had no idea that their Cold War adversary possessed such sophisticated communications and space navigation equipment.

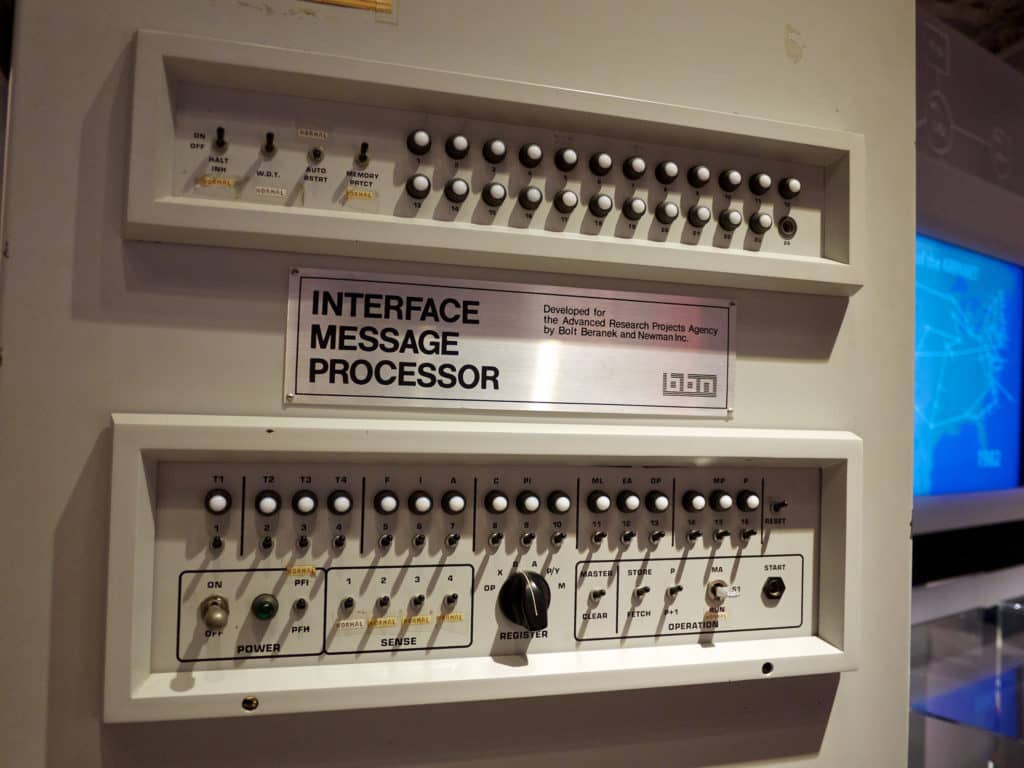

The US government sprang into action. In 1958, President Dwight D. Eisenhower created the Advanced Research Projects Agency (ARPA) with the explicit aim of regaining technological superiority in arms and space exploration.

ARPA was funded by the US Department of Defense, hence the common perception that the internet was created by the Pentagon. It was, in fact, largely an independent body that had the funds and mandate to do what it took to succeed.

It did exactly that, directly employing hundreds of scientists and subcontracting several more. ARPA initially worked with professors at MIT, Stanford, and Berkeley to develop advanced technology for space, ballistic missiles, and nuclear testing. A focus on communication via computers came later, after the organization understood that it would be easier to protect data in transit by breaking it up into tiny pieces and reassembling it at the other end. That is, broadly, how the internet moves data today.

The “Galactic Network” concept

The theory of a global interlocking network of computers was first put forward by MIT scientist J.C.R. Licklider, who spoke about a “Galactic Network” concept. Under this vision, computers across the globe would be connected to enable quick and seamless data transfer. The network would be accessible to everyone, allowing for instant communication and access to a vast amount of information.

Licklider joined ARPA in 1962 to head the project. His colleagues Leonard Kleinrock and Lawrence Roberts helped develop the theory of packet transfer, whereby messages are broken up into ‘packets’, sent separately to their destination, and then reassembled at the other end. This was far more secure than sending messages via a solitary line. The complexity of the transfer meant that eavesdropping was difficult, and one intercepted packet gave away barely any discernible information.
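The packet idea described above can be illustrated with a minimal sketch. This is not how ARPANET was implemented: the function names `to_packets` and `reassemble`, the packet size, and the use of a shuffle to stand in for packets taking different routes are all assumptions made for illustration.

```python
import random

def to_packets(message: str, size: int = 8) -> list[tuple[int, str]]:
    """Split a message into (sequence_number, chunk) pairs of at most `size` characters."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]

def reassemble(packets: list[tuple[int, str]]) -> str:
    """Put the chunks back in sequence order and join them into the original message."""
    return "".join(chunk for _, chunk in sorted(packets, key=lambda p: p[0]))

message = "Packets may arrive out of order."
packets = to_packets(message)
random.shuffle(packets)  # stand-in for packets travelling along different routes
print(reassemble(packets) == message)
```

Each packet carries only a fragment of the message, and the sequence number is what lets the receiver restore the original order.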

Development of a wide area network

The first result of this experiment came in 1965 when ARPA connected a computer in Massachusetts with another in California via conventional telephone lines. The outcome was mixed: it showed that computers could exchange data over a wide area network, but not everything went according to plan. The circuit-switched telephone lines proved inadequate for the job, which strengthened the case for packet switching.

At the same time, the US Department of Defense also contracted the RAND Corporation to conduct a study on how it could maintain its command and control facilities in the case of a devastating nuclear attack. The RAND Corporation began work on a decentralized system that could not be knocked out in such a scenario, so that the military would retain control over its nuclear assets for a second-strike capability.

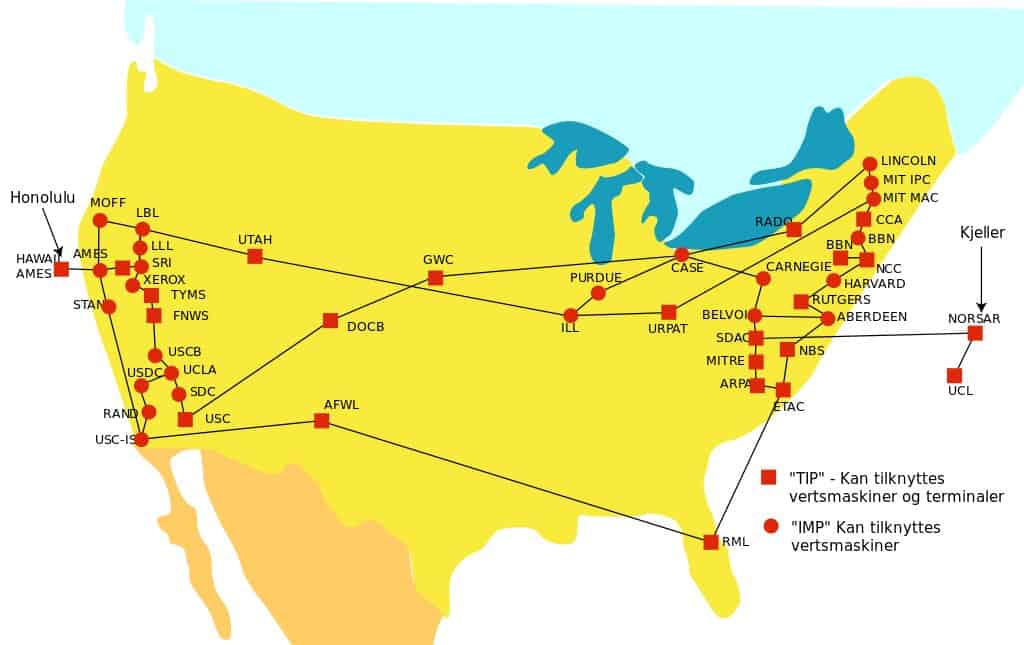

By the late 1960s, both the RAND Corporation and ARPA’s researchers came to the same conclusion independently of each other: the best way forward was to develop a wide area network that had the range and connectivity to prevent subterfuge. Their recommendations were incorporated into a computer network system called ARPANET.

In the initial phase, ARPANET linked computers installed at UCLA and Stanford. Students at UCLA logged in to Stanford’s computer and accessed its database. After successful testing, the system gradually expanded to host a total of 23 computers across the United States, and the number was almost 50 by 1974.

The first public demonstration of this new technology came during the International Computer Communication Conference in October 1972. At the same time, a version of email was introduced—written by Ray Tomlinson to facilitate easy communication for ARPANET developers. This program was further refined to list, archive, forward, and respond to messages.

Progression to multiple networks

In 1973, work started on a much more ambitious program: to develop internetworking, or the connecting of multiple networks. This was different to the system architecture of ARPANET, which was a closed network and didn’t allow computers from different networks to communicate with it.

The idea was to facilitate an open architecture that wouldn’t be restricted by geography or specific protocols. The protocol suite that grew out of this work would later come to be known as the Transmission Control Protocol/Internet Protocol (TCP/IP). The utility of TCP/IP is that it allows each individual network to operate independently: if one network is brought down, it doesn’t affect the stability of the entire internet.

TCP/IP involved no overall global watchdog or manager. Networks were connected together via routers and gateways. And it was from the initial code of the TCP/IP protocol that the internet would later emerge. The key underlying idea of the internet was that of open-architecture networking. That’s what set it apart from earlier versions like ARPANET which were critical in validating things like packet switching, but weren’t designed to be as inclusive as the internet we see today.

Here are some of the salient features of the TCP/IP protocol that we see embedded on the internet:

- Each network should be able to work on its own and require no modifications to connect to the internet.

- Within each network there would be a gateway to connect it to the outside world.

- The gateway would not restrict the information passing through it.

- Information would be transmitted via the fastest possible route.

- If one computer was blocked, traffic would search for an alternative route.

- The gateways would always remain open and they would route the traffic without prejudice.

- All development would be open source and design information would be freely available to all.
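The rerouting behavior in the list above can be sketched with a small graph search. This is only an illustration: the network map `links`, the node names, and the function `find_route` are hypothetical, and "fewest hops" is used here as a simple stand-in for "fastest route". Real internet routing protocols are considerably more involved.

```python
from collections import deque

def find_route(links, start, goal, blocked=frozenset()):
    """Breadth-first search: return the route with the fewest hops that avoids blocked nodes."""
    if start in blocked or goal in blocked:
        return None
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in links.get(node, ()):
            if neighbor not in seen and neighbor not in blocked:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no route survives the blockage

# Hypothetical map: two ways to get from A to D.
links = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "E"],
    "D": ["B", "E"],
    "E": ["C", "D"],
}

print(find_route(links, "A", "D"))                 # shortest route, via B
print(find_route(links, "A", "D", blocked={"B"}))  # B is down, so traffic goes via C and E
```

No central manager is consulted: the route is found purely from knowledge of which neighbors are reachable, which mirrors the idea that no single failure should bring down the whole system.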

Early renditions of this idea involved a packet radio system that used satellite networks and ground-based radio networks to maintain effective communication. In 1973, researcher Robert Metcalfe developed a system that replaced radio transmission of data with a cable capable of providing a much larger amount of bandwidth.

Metcalfe called it the Alto Aloha Network, and it laid the foundations for what would later be known as Ethernet. The system was a significant upgrade as it allowed the transfer of millions of bits of data per second, compared with the thousands of bits per second possible via radio channels.


Personal computers and the information superhighway

Until the mid-1970s there weren’t any computers available for personal use. The internet was designed with the view that it would encapsulate networks maintained on large mainframe computers—usually owned by large corporations, governments, and academic institutions.

TCP/IP was incorporated during this time but it took several years of painstaking research and fine-tuning before it was able to serve the needs of the globe.

The Altair 8800, widely believed to be the world’s first personal computer, was introduced in 1975 by designer Ed Roberts as a kit. Its processor could address up to 64 KB of memory, although the basic kit shipped with far less. Apple was founded the next year (in 1976), and its first mass-market personal computer, the Apple II, was released in 1977. Computers were now slowly becoming part of the mainstream and finding a way into people’s homes.

In 1979, researchers Tom Truscott and Jim Ellis developed USENET, a system based on UNIX architecture that allowed files to be transferred between computers on the same network. It is widely regarded as the first rendition of internet forums and discussion groups. In its first iteration, USENET allowed people to transfer electronic newsletters between Duke University and the University of North Carolina. The name is derived from ‘users network’.

Early versions of USENET only allowed for one or two file transfers every day. But discussions could be threaded via topics and the system didn’t need a centralized database to function. The program gave birth to the concept of the free flow of information, later known as the ‘information superhighway’.

The internet takes off

It was in the early 1980s that the commercial version of the internet that we see today really started to take shape. ARPANET was in the process of switching to inclusive TCP/IP protocols but still lacked the backbone of the entire system—meaning it wasn’t capable of morphing into the global interlocking behemoth that the internet has become today. It needed an upgrade.

The possibility of switching over to connecting to the internet via traditional dial-up phone lines came during a meeting of computer scientists in 1981. The inadequacies of radio or satellite connections were discussed and the aim was to expand access to ARPANET.

Email was a powerful driver of early innovation in the internet. Its rapid popularity began to choke the system. Users were constantly sending messages back and forth and researchers understood this behavior needed to be encouraged. However, in order to do that, the system needed to be expanded and upgraded to the point that geography would no longer be an issue.

Funding for this idea came from the National Science Foundation in 1982. Computer scientists drew on Telenet, the world’s first commercial packet-switched network service, and allowed access to it via a complementary solution called PhoneNet. This resulted in a faster, cheaper way of connecting to the internet and allowed for email communication between the US, Germany, France, Japan, Korea, Finland, Sweden, Australia, Israel, and the UK. Development of the internet started to accelerate.

The Domain Name System is born

The concept of the Domain Name System (DNS) came about in 1984 (the year in which George Orwell’s novel is set). Before DNS, each host computer on a network was simply assigned a name. These names were added to a centralized database that was easily accessible by all but didn’t have the functionality to tier content according to purpose, such as education, healthcare, or news.

DNS attached a suffix to the name of each host computer and gave birth to the tiered web addresses we see today, such as .edu, .gov, and .org. It could also distinguish locations by country.
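The tiering can be seen by reading a host name from right to left. The sketch below only splits a name into its levels and performs no actual DNS lookup. The function name `domain_hierarchy` and the example host names are hypothetical.

```python
def domain_hierarchy(hostname: str) -> list[str]:
    """Return the chain of domains from the top-level suffix down to the full host name."""
    labels = hostname.lower().rstrip(".").split(".")
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]

for name in ("www.example.edu", "www.example.co.uk"):
    print(name, "->", domain_hierarchy(name))
```

In the first name, the rightmost label indicates purpose (education). In the second, it indicates a country.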

At the same time, Western governments began to see the internet for what it is today—a powerful medium for the dissemination of information—and started to encourage its use in areas like education and healthcare. The British government announced JANET (Joint Academic Network)—a centralized system to facilitate information sharing between universities in the UK.

The US wasn’t far behind—the National Science Foundation established NSFNet to foster collaboration in research and design within higher education across America.

NSFNet had the mandate to work on federal policy initiatives as well. Its most crucial breakthrough was the agreement to provide the backbone for the internet in the US. It did so by linking five supercomputing centers, immediately expanding the capacity of the system and leading to a surge in use. For the first time, matters of national security and defense were excluded, marking the final tilt of the internet away from military purposes and toward research, education, and development.

NSFNet’s new backbone resulted in a dramatic surge of computers on the network. In 1986, there were only 5,000 hosts. The number rose to 28,000 just a year later. Because government and commercial entities were deliberately excluded, the new infrastructure was available exclusively for research, news, education, and entertainment. This further encouraged private companies to step in and start laying the foundations for internet connectivity in US households.

Technological advances boost growth

ARPANET was formally decommissioned in 1990 as both personal computers and the internet started to become ubiquitous throughout the US. The infrastructure of this early precursor to the internet was unwieldy and unsuitable for mass public use, hence the decision.

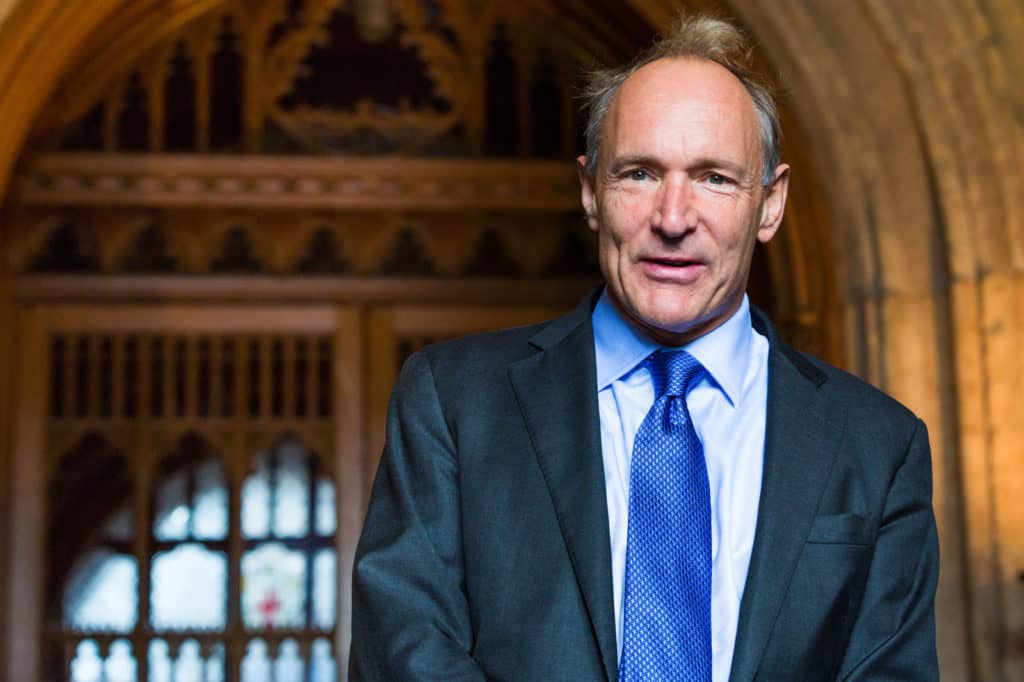

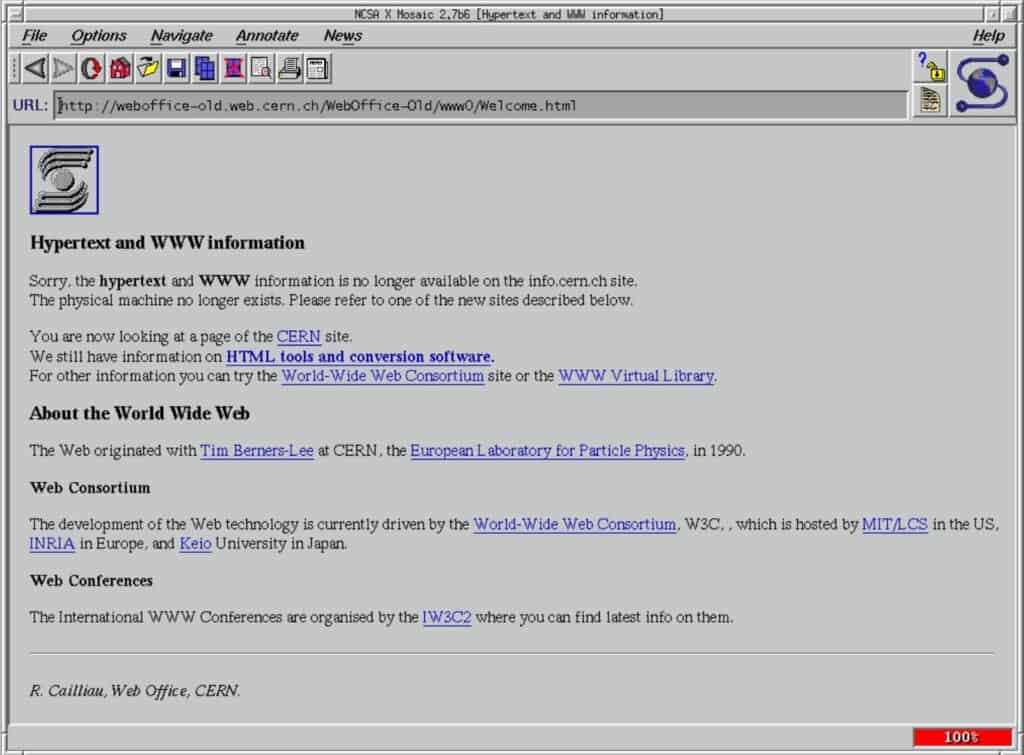

The development of the World Wide Web that we see today was accelerated by scientists at the European Organization for Nuclear Research (CERN) who brought into existence hypertext markup language (HTML). They also established the uniform resource locator (URL) as a standard address format that recognizes both the host computer and information sought.

Tim Berners-Lee, who is widely credited as the inventor of the World Wide Web, was an independent contractor at CERN working on this specific project. He wrote the proposal to link hypertext with TCP/IP and domain name systems. The proposal was accepted by his manager, Mike Sendall, and the rest, as they say, is history.

Both URL and HTML proved to be fundamental to the explosive growth of the web. HTML became the standard language for creating websites and web applications while a URL makes it much easier for users and networks to interact across the internet. It also allows for caching, storing, and indexing web pages.
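To see how a URL identifies both the host computer and the information sought, the sketch below splits an address using Python’s standard `urllib.parse` module. The example URL and the helper name `describe_url` are hypothetical, chosen only for illustration.

```python
from urllib.parse import urlsplit

def describe_url(url: str) -> dict[str, str]:
    """Break a URL into its access scheme, the host computer, and the resource requested."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname or "",
        "resource": parts.path or "/",
    }

print(describe_url("http://www.example.org/history/internet.html"))
```

The host portion is what DNS resolves to a machine, while the path tells that machine which document to return.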

It came as little surprise that Archie, widely regarded as the world’s first search engine, was developed around the same time, in 1990. The code for this program was written by Alan Emtage and Bill Heelan, both associated with McGill University. Its first version was simply a way for people to log in and search collected information via the Telnet protocol. Web crawlers at the time were not terribly efficient.

The internet becomes increasingly accessible

In 1991, Al Gore helped pass the High Performance Computing Act, propelling the then senator and future US vice president into internet superstardom. Gore had retained a keen interest in computing since his early years as a Congressman in the 70s. The passage of this law created a US$600 million research fund for high performance computing, which resulted in the National Research and Education Network (NREN) and the National Information Infrastructure (NII). The latter was popularized by the term ‘information superhighway’.

Some of the most important features of the act were the promise to build widespread internet infrastructure and make it accessible to all. Federal agencies were required to build websites and make information public by putting it on the web, and a pledge was made to connect all US classrooms to the internet by the year 2000.

The year 1993 saw the public appearance of Mosaic, the first graphical web browser to win widespread popularity. It was developed by a team that included Marc Andreessen, who went on to establish Netscape Communications, best known for its wildly successful Netscape Navigator line of browsers.

The proliferation of Netscape, HTML, and URLs was a triple whammy. Web browsers were now customizable and capable of displaying rich images directly on screens. Additionally, they came backed by 24-hour customer support. Trial versions of Mosaic were made available for free—this decision had several intended network effects and users couldn’t get enough.

By the time Netscape Navigator came online, there were already tens of thousands of users accessing Mosaic on their PCs. Netscape was a significant upgrade that allowed for faster browsing, boot times, and richer images.

Up until now, the internet had largely served the science and research communities by allowing for file transfers and democratizing access to information, as well as geeky early adopters who were fascinated with things like email and discussion forums. Graphic web browsers, search engines, HTML, and rapid growth of internet infrastructure changed the entire paradigm.

Another important development at the time was the invention of JavaScript (not to be confused with Java) by Brendan Eich. Along with HTML and CSS, it now ranks as one of the three core technologies powering the web and web applications.

JavaScript was pushed by Netscape’s Marc Andreessen, who understood that the key to the ubiquity of the web was to make it more dynamic and vivid. The language helped web designers and programmers assemble things like plugins, images, and videos directly in a website’s source code. Andreessen referred to it as a “glue language”.

Businesses arrive (and form) online

The web was now starting to seriously take off. Businesses were scrambling to get online, social networks and interest groups were forming, and people scoured the internet for news, media, entertainment, gaming, and information. Personal computers had never been cheaper. In 1994, Jeff Bezos wrote the first business plan for Amazon.

Also in 1994, it was estimated that there were about 3.2 million host computers connected to the internet and approximately 3,000 websites. In two years that number grew to approximately 13 million host computers and roughly 2.5 million websites. The pace of growth was off the charts.

Bill Gates penned a famous memo in 1995 titled “The Internet Tidal Wave”. It was a verbose and fairly prescient vision of the technology that was about to fundamentally change the way humans interact, engage, explore, and seek information.

Gates accurately predicted that the internet would significantly decrease costs of communication and that cheap, broadband connections in households would lead to increased demand for video and video-based applications. He pointed to the growth of Yahoo as an equalizer, noting that it was easier to search for information on the web than within Microsoft’s internal networks.

“The internet is the most important single development to come along since the IBM PC was introduced in 1981,” Gates wrote.

1995 also came to be known as a seminal year in other aspects. It saw the launch of Windows 95 with the bundling of Internet Explorer for free—Microsoft’s first effort to challenge the dominance of Netscape. Amazon also officially launched this year as a bookstore, along with other early internet icons such as Yahoo and eBay.

Sun Microsystems released Java, allowing for animation on websites and a new level of internet interactivity. PHP, a server-side scripting language, was developed this year too. It complemented HTML and paved the way for web template systems, content management systems, and frameworks.

Development of rules and regulations

But as the internet well and truly became a permanent part of people’s lives, the first signs of clamping down on freedom of expression and net neutrality started to manifest. The United States Congress introduced the Communications Decency Act—observers saw the bill for what it was: an attempt to censor free speech and information.

The official version behind the Communications Decency Act revolved around concerns stemming from hate speech and pornography that had started to permeate throughout the web. The US government explained it wanted to extend oversight on the internet similar to regulations applied on television and radio.

A rebuke to these laws was penned by John Perry Barlow—a founder of the Electronic Frontier Foundation and an impassioned advocate for the neutral, stateless internet. Barlow argued that the internet wasn’t designed to be subjected to a singular authority and that governments have no “moral right” to rule it.

He wove the web (pun intended) of an alternative reality, a space where information cannot be suppressed and tyranny is unheard of.

“We are creating a world that all may enter without privilege or prejudice accorded by race, economic power, military force, or station of birth. We are creating a world where anyone, anywhere may express his or her beliefs, no matter how singular, without fear of being coerced into silence or conformity,” wrote Barlow in “A Declaration of the Independence of Cyberspace”.


1998 was the year Sergey Brin and Larry Page co-founded Google. Napster spread its wings in 1999, and music distribution would never be the same again. The file-sharing service eventually shut down under legal pressure from the recording industry, but not before it introduced a completely new approach.

By now businesses were completely sold on the idea of an internet-only future. Adding a .com at the end increased the valuation of your company ten-fold. Venture capitalists didn’t help the issue either by backing each and every webstore or hare-brained idea. That eventually led to the dotcom crash of 2000.

But for the internet, there was just no looking back.

Web 2.0: the mobile revolution

The proliferation of mobile data networks helped bring vast swathes of the global population online for the first time. Internet service in the late 90s and early 2000s was prohibitively expensive and could only be accessed by clunky devices such as PCs and laptops—hence keeping it out of reach for most.

2G networks had been around since the early 90s, with data extensions such as GPRS and EDGE following later, but the introduction of 3G in 2001 was a true gamechanger. It took a little while for the tech to spread across the globe, but the mass adoption of smartphones completely changed the paradigm. With BlackBerry, and later the iPhone and Android devices, consumers now had a small portable device that could access the web, check email, and stay connected via social networking.

Consumers demanded snazzier websites and greater utility from the internet. Despite the dotcom crash of 2000, fundamentals remained unchanged: the web was a growing phenomenon and people were using it to buy things, search, and access information at an unprecedented rate.

Amazon, which had its IPO in 1997, and Yahoo, which IPO’d in 1996, were continuing to grow and raise the bar. The term “Web 2.0” first started making the rounds in the mid-2000s as websites shifted from static pages to dynamic HTML, high-speed internet started to become the norm, and user-generated content began to spread its wings.

This led to websites like YouTube and social networking apps like Orkut, Myspace, Facebook, and Twitter.

In 2011, more than 90 percent of the world’s population lived in areas with at least 2G coverage with 45 percent living in areas that had both 2G and 3G. The next year it was estimated that nearly 1.5 billion people accessed the web via mobile broadband networks, with the number expected to grow to 6.5 billion in 2018.

What will the future hold for the internet?

It’s hard to imagine life without the internet now. And moving forward, it’s likely that the world will become even more connected.

Data volumes are growing at the rate of 40 percent year-on-year according to the World Economic Forum, with some estimates suggesting that 90 percent of all data in existence was created in the last two years.

The number of internet-connected devices such as smart TVs, cars, and industrial machines is predicted to reach a staggering 75 billion by 2025. This means Wi-Fi networks will have to be upgraded accordingly. Light fidelity (Li-Fi) technology, which has reached data transfer speeds of up to 224 gigabits per second in laboratory tests, is already in development and could eventually replace Wi-Fi.

But at the same time there are challenges. More than one-third of the world’s population still doesn’t have access to the internet. The United Nations has included universal affordable internet access in its Global Goals—recognizing that it has the power to reduce poverty, advance healthcare and education, and build communities.