The bot problem

Facebook, along with most other social networks, has a bot problem.

From Facebook’s perspective, bots can be indistinguishable from legitimate users. These automated programs can be used to scrape users’ personal information without consent, fabricate influence campaigns, covertly push agendas, spread disinformation, and make scams more convincing.

While automated systems can detect more glaring bot activity, more sophisticated bots can mimic human input so accurately that Facebook can struggle to tell the difference.

Having failed to stop bots on its platform, Facebook instead appears to be trying to normalize the idea that bots are just a part of life on the internet. Bots won’t be stopped any time soon.

Inside the bot farm

Comparitech researchers, led by Bob Diachenko, recently stumbled upon a Facebook bot farm hosted on an unsecured server. We found the bot farm as part of our routine scans for vulnerable databases on the internet. Because no authentication was required to access the bot farm, we took a peek under the hood to see how it works.

The bot farm we found was used to create and manage 13,775 unique Facebook accounts. They each posted 15 times per month on average, for a total of 206,625 posts from this one farm in a given month. Note that new bots are being created by bot farms and being taken down by Facebook’s moderation systems all the time, so the total figures could vary quite a bit month-to-month. The earliest post from these accounts was created in October 2020.

Researchers say Facebook had blocked only about one in 10 of the farm’s bot accounts as of the time of publishing.
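The monthly post total follows directly from the account count and the average posting rate reported above. As a quick sanity check, here is a minimal Python sketch of that arithmetic. The variable names are ours, and the blocked and active counts are rough estimates that assume "about one in 10" means exactly one tenth, which the researchers did not state.

```python
# Figures reported by the researchers.
accounts = 13_775
posts_per_account_per_month = 15  # reported average

# Total posts from the farm in a given month.
monthly_posts = accounts * posts_per_account_per_month
print(f"{monthly_posts:,} posts per month")  # prints "206,625 posts per month"

# Assumption: treat "about one in 10" as exactly 1/10 for a rough estimate.
blocked_estimate = accounts // 10
active_estimate = accounts - blocked_estimate
print(f"roughly {blocked_estimate:,} blocked, {active_estimate:,} still active")
```

Because accounts are created and taken down continuously, these numbers are a snapshot rather than a stable total.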

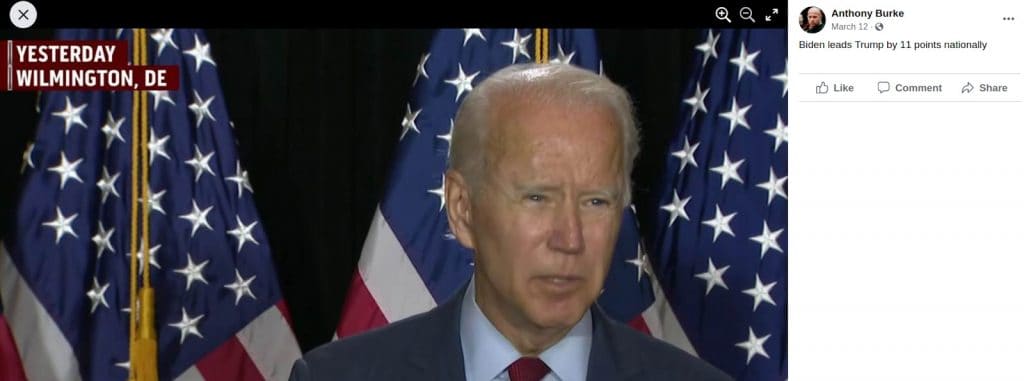

The other accounts remain active. The screenshot below, taken on May 10th, shows a post made 17 hours earlier:

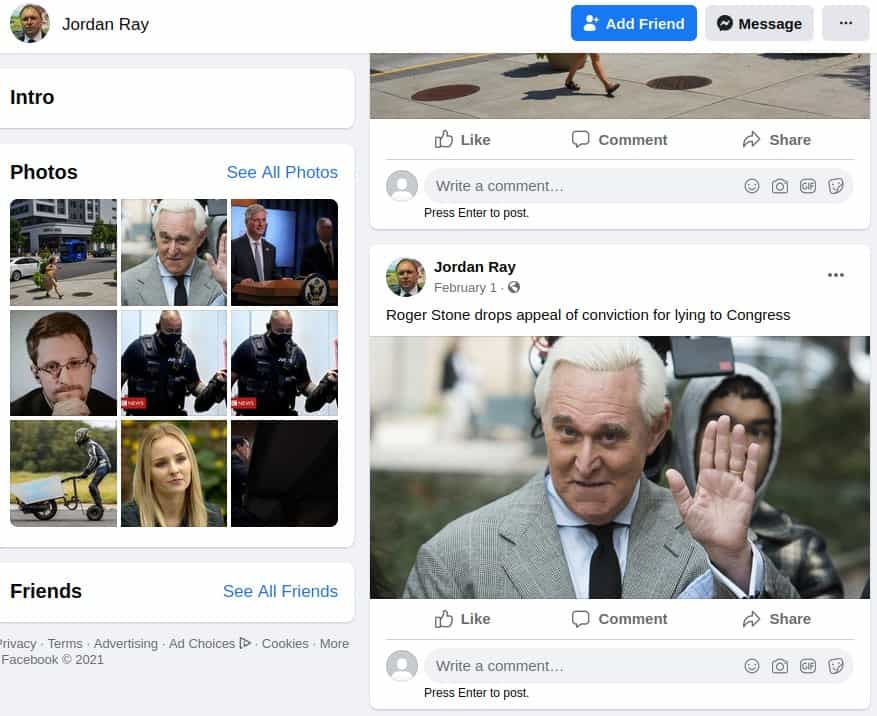

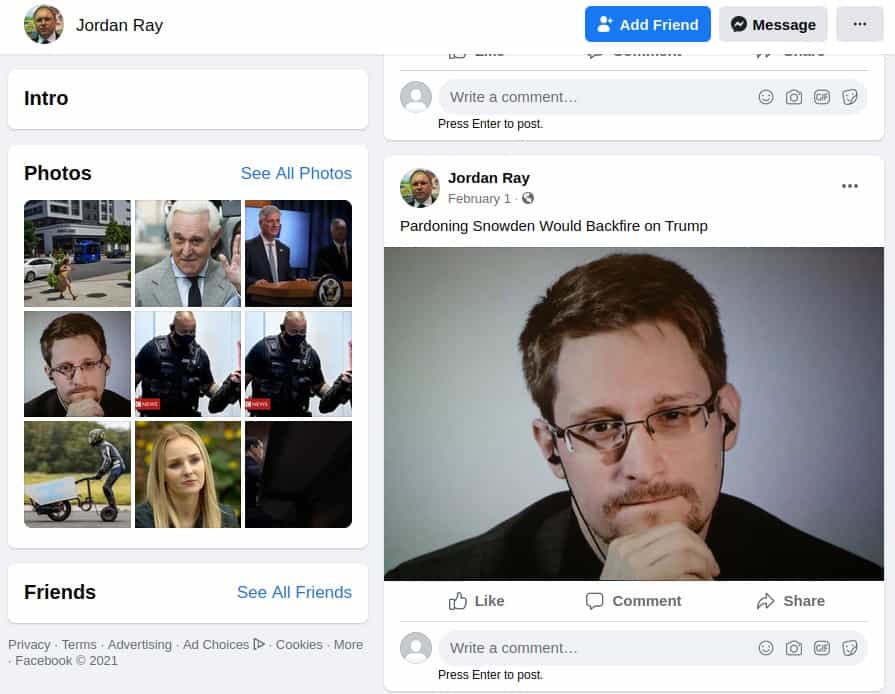

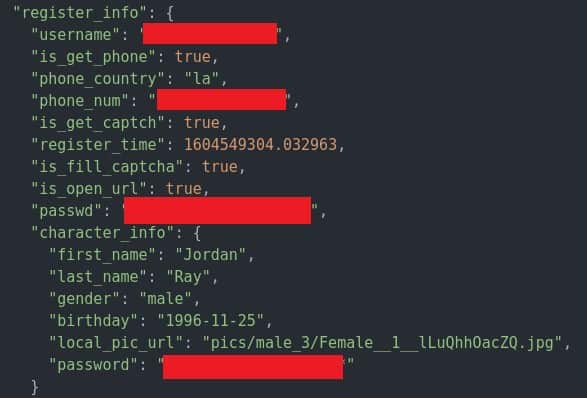

These bots appear to be used for political manipulation. They post provocative and divisive political content to incite legitimate Facebook users. Each account looks like a real person at first glance, complete with a profile photo and friends list (likely consisting of other bots). To expand their reach and ensure they’re not just posting to each other’s timelines, the bots join specific Facebook groups where their posts are more likely to be seen and discussed by legitimate users.

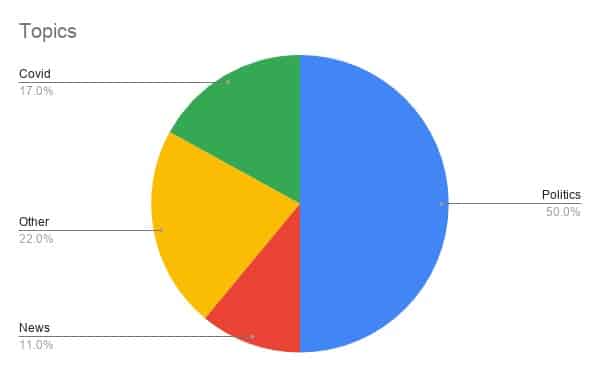

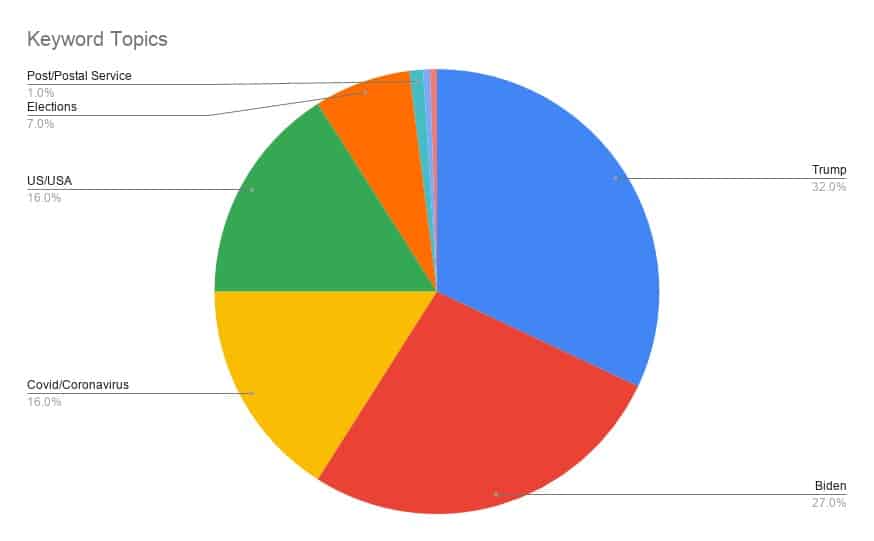

About 50 percent of the posts were about political topics. Posts about news events and the pandemic were also common.

“Trump” was the most-used keyword in bot posts, followed by “Biden”. Specific events discussed include the 2020 US presidential election, California wildfires, protests in Belarus, US border restrictions, the COVID-19 pandemic, and a recent shooting in San Antonio.
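A keyword ranking like this can be produced by counting how many posts mention each term. The sketch below shows one way to do that in Python. It is not the researchers’ actual method, which was not described. The function name `keyword_counts` and the sample posts are hypothetical placeholders and do not come from the bot farm’s data.

```python
import re
from collections import Counter

def keyword_counts(posts, keywords):
    """Count how many posts mention each keyword (case-insensitive, whole words)."""
    counts = Counter()
    for text in posts:
        # Split the post into a set of lowercase words so each post counts once.
        words = set(re.findall(r"[a-z0-9']+", text.lower()))
        for keyword in keywords:
            if keyword.lower() in words:
                counts[keyword] += 1
    return counts

# Hypothetical placeholder posts, invented purely for illustration.
sample_posts = [
    "placeholder post mentioning Trump",
    "placeholder post mentioning Trump and Biden",
    "placeholder post about wildfires",
]

ranking = keyword_counts(sample_posts, ["Trump", "Biden"]).most_common()
print(ranking)
```

Counting each post once per keyword, rather than counting every occurrence, keeps a single repetitive post from skewing the ranking.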

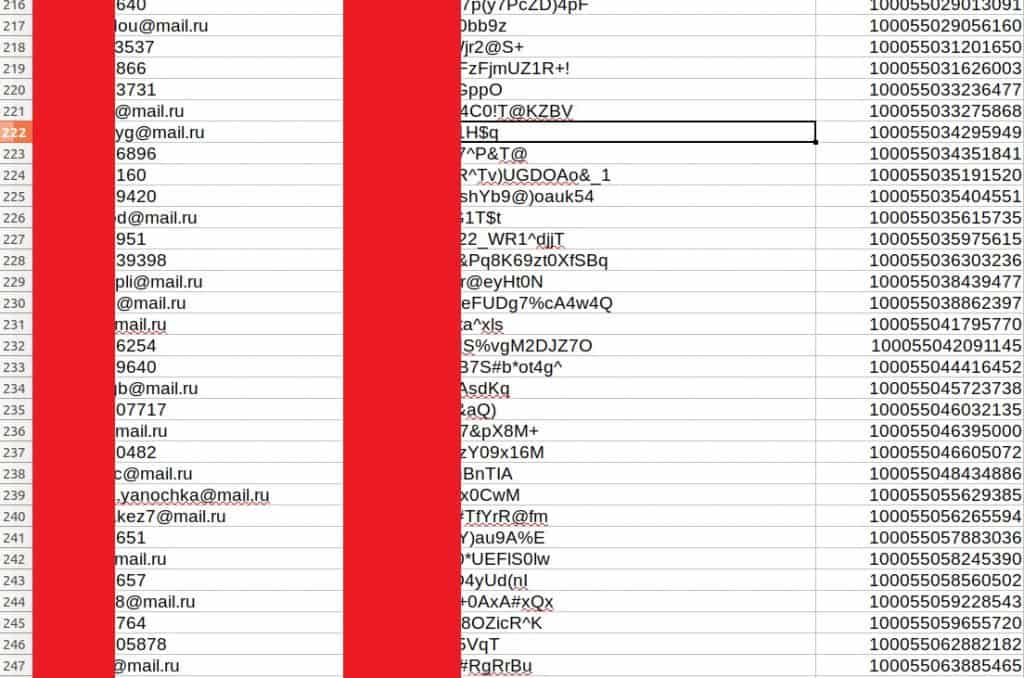

Most of the bot accounts were registered with temporary phone numbers and @mail.ru email addresses. Because the bot farm’s backend required no authentication, Comparitech researchers were able to collect username and password pairs for each bot account.

Comparitech reported the bot accounts to Facebook but had not received a response as of the time of publishing.

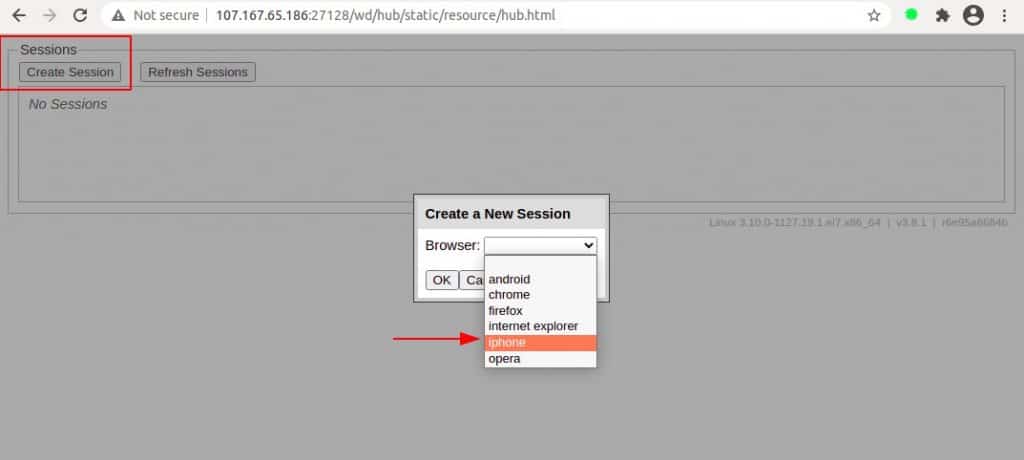

Selenium used to imitate human input

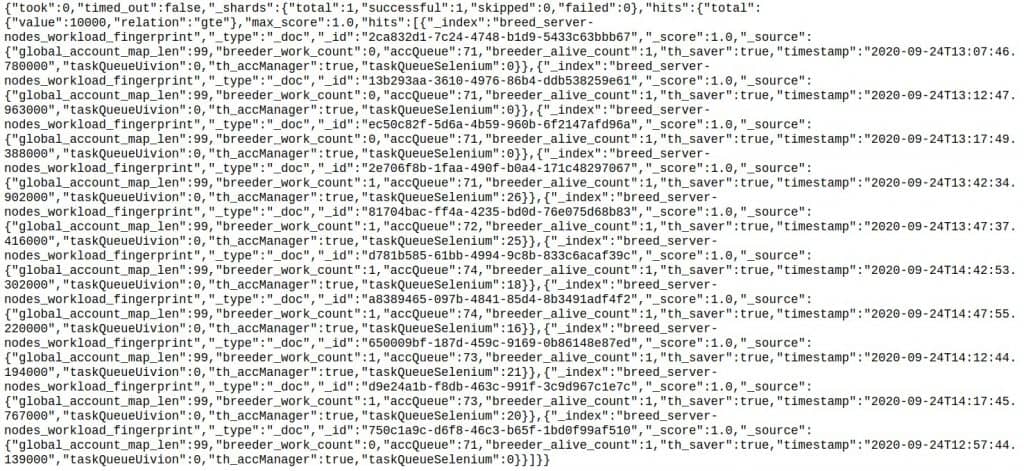

A key tool used by the bot farm to imitate human behavior is called Selenium.

Selenium is a multifunctional browser automation toolset that, in this case, simulates the activity of a real user. Bots controlled through Selenium can open and navigate web pages in a normal web browser, click buttons and links, enter text, and upload images. The bots we uncovered made posts with text and images, reposted articles from news outlets, and joined popular groups in various categories (music, TV shows, movies, etc.).

Selenium can be used to control an army of bots, tasking them with joining groups and creating posts. Researchers found bot sessions can emulate a range of user agents, from iPhones to Chrome browsers, so the owner can make traffic appear to come from a broad range of devices. Selenium can be used through proxies, further allowing bots to mask their source. Selenium can even be set up to add a delay between clicks, so it doesn’t appear to navigate pages faster than a normal human. Researchers say even some of the most advanced bot detection techniques cannot distinguish between a human and Selenium.

What remains unclear is where the bots get their information and images from. Researchers could not find any crawlers that gather the images posted by bots, so we assume the attackers accumulate the content from a private source.

Although this particular bot farm wasn’t well-secured, most are much more difficult for unauthorized users to find and access.

Why bots?

So what’s the purpose of all this bot farming? Bots can serve a variety of ends. Whoever runs the bot farm can use it for their own purposes or rent it out to third parties for a fee.

Bots play a huge role in influence campaigns. State-sponsored influence campaigns from Russia received a lot of attention during the last two US presidential election cycles. Those campaigns likely used bot farms like this one to spread disinformation and incite Facebook users.

Bots can be used to artificially inflate the public’s perceived enthusiasm for a certain cause, person, product, or viewpoint. Astroturfing, for example, masks the real sponsors of a message to make it appear as though it originates from and is supported by grassroots participants. If people think bots are human, they are more likely to believe that the message has popular support.

In the same vein, bots can be used to artificially boost subscriber or follower numbers. The bot farm we examined subscribed accounts to certain groups. To real users, a page or group with 1,000 members seems more legitimate than a page with a dozen members. This can be used to lure in victims for some sort of scam.

Lastly, though least likely, Facebook itself could be taking advantage of bots to inflate its own user numbers and user activity, perhaps to please stakeholders who demand quarter-on-quarter user growth.

Bear in mind that bots are not necessarily malicious all of the time. They might be configured to post benign content until the bot farm administrator decides to weaponize them in an attack.