You can have the best cryptographic systems in the world in place, but your setup may still be vulnerable to side-channel attacks. This is because side-channel attacks don’t target weaknesses in the cryptosystems themselves.

Instead, attackers observe the implementation of the system, looking for information leaks that may tell them something about the cryptographic system’s operation. In certain situations, they can exploit this information to deduce the keying material, ultimately allowing them to break the system.

Side-channel attacks are based on the fact that when cryptosystems operate, they cause physical effects, and the information from these effects can provide clues about the system. Some of the physical effects include:

- The amount of power an operation consumes

- The amount of time a process takes

- The sound an operation emits

- The electromagnetic radiation leaked by an operation

These effects can leave behind clues that can help an attacker deduce important information. This information can lead them to figure out the cryptographic keys that secure the data, reveal partial state information, expose parts or all of the ciphertext as plaintext, and ultimately lead to the compromise of the system.

This means that when implementations leak sufficient amounts of side-channel information, the system becomes insecure. The concept of side-channel attacks can be pretty hard to get your head around, so let’s begin with an analogy to help illustrate the concept.

The safe-cracker

Let’s say the world’s most valuable diamond is kept in a safe at a secure facility, but a daring thief wants to claim it for themselves. After months of scoping out the defenses that are in place, the thief has figured out that they can get to the room that houses the safe, but only for five minutes when the guard changes shift.

The problem is that the safe has 10,000 possible combinations, and the safe-cracker has no clue what the correct code may be. Given the impossibility of getting in, trying the roughly 5,000 combinations the average attempt would take, and getting out before the next guard shows up, we could consider the diamond secure against theft.

However, our thief is a master lock manipulator, and as they turn the dial, they can feel the extremely subtle imperfections of the lock, giving hints as to the true combination. With just a couple of minutes of careful concentration and years of experience, the thief finds the combination, the safe clicks open, and the diamond is in their hands. They have just enough time for a speedy getaway.

(Before any lockpickers turn nitpickers, we know that this is the Hollywood version of how lock manipulation works and that, in reality, it is a much slower process. However, the Hollywood analogy still helps to give a good indication of how side-channel attacks work. While lock manipulation is certainly more tedious in real life, so are side-channel attacks.)

The thief just pulled off the perfect side-channel attack. The lock stayed strong and was never broken, so we can’t necessarily say the vulnerability was down to a design flaw in the lock itself. However, because the manufacturing process left behind tiny imperfections that gave clues about the combination, we could say that the real weakness was its implementation.

If the lock had been made more carefully, the thief wouldn’t have been able to feel the imperfections and use them to figure out the combination. Instead, they would have had to go the old brute-force route, which never would have worked given the time limit of our situation. So while the security setup was secure against brute-forcing, it was not secure against side-channel attacks.

It’s the same when it comes to computer systems. If they aren’t implemented carefully, the security algorithms and other aspects may give away tiny pieces of information, like the feeling from the imperfections of the lock. Attackers can then use this information to break the system.

Of course, perfection doesn’t really exist, and there is always a way to get around any security system, whether it’s a physical safe or a cryptosystem that helps to encrypt data. The aim of security in either space isn’t to make something impenetrable, but just to make it too expensive or time-consuming to bother attacking.

Side-channel attacks are simply another tool in the attacker’s arsenal. If an attacker can’t find a practical way to break the algorithm itself, then they can collect information from its implementation and see if this gets them any closer to breaking the system.

We need to take side-channel attacks into consideration whenever we try to secure a system. If we spend all of our effort in setting up the most elaborate cryptosystem without accounting for any side-channel weaknesses, we may very well end up with our defenses breached.

The history of side-channel attacks

One of the earliest examples we have of a side-channel attack comes from World War II. At the time, some communications were being encrypted by Bell 131-B2 mixing devices. A researcher from Bell Labs noticed that whenever the machine stepped (caused one of the other rotors to rotate), an electromagnetic spike could be seen via an oscilloscope, even at a distant part of the lab. Upon further examination, he found that he could use these spikes to recover the plaintext from the encrypted data.

The Bell 131-B2 mixer, from which Bell researchers first discovered electromagnetic side-channel attacks. TTY mixer 131B2 by the Department of the Army Technical Manual licensed under CC0.

Bell reported the issue to the Signal Corps, but was allegedly dismissed at first. In a bid to prove the severity of the issue, they placed engineers in a building across the street from the Signal Corps’ cryptocenter. From 80 feet away, they recorded the encrypted signals from the cryptocenter for an hour. Just a few hours later, they had pieced together 75 percent of the plaintext from these encrypted transmissions.

This showed just how grave the threat was, and Bell Labs spent the next six months investigating the issue, as well as how it could be mitigated. Eventually, its engineers came to three potential approaches, which are still used today to counter side-channel attacks:

- Shielding – In this case, blocking the radiation and magnetic fields that were being emitted.

- Filtering – Adding filters that stop the electromagnetic radiation.

- Masking – Covering up the signals with other transmissions.

The company came up with a modified design for the machine, but the shielding and other fixes caused operational issues, and every existing machine would have needed to be sent back to Bell Labs for retrofitting. Instead of adopting the modified version, the Signal Corps warned commanders to secure a diameter of 100 feet around their communications centers, in order to prevent interception using the recently discovered techniques.

The problem was essentially forgotten about until the mid-fifties when the CIA rediscovered it. This time, the solution was to secure a zone of 200 feet in all directions. Another option was to run multiple devices at once to mask the signals.

In the following years, more countermeasures were attempted, but new threats were also discovered. Researchers found that voltage fluctuations, acoustic emanations and vibrations could also reveal clues that could be used to decrypt messages. These attacks were given the code name TEMPEST by the intelligence community.

Over time, a range of classified TEMPEST standards were developed, with the aim of defining a set of rules to reduce the risks from side-channel attacks.

The first unclassified publication of these threats came from Wim van Eck in 1985, which led to these eavesdropping techniques being dubbed van Eck phreaking. While the intelligence community already knew of these dangers, it was thought that the attacks were only within the reach of nation-states.

Van Eck accomplished his eavesdropping with just $15 worth of materials and a TV, proving that the attack was well within the reach of other parties. Markus Kuhn published the details of a number of other low-cost monitoring techniques in 2003.

During the same period that the TEMPEST attacks were being studied, the UK’s MI5 was also making early forays into the realm of side-channel attacks. In his memoir, MI5 scientist Peter Wright details the agency’s attempts to decipher Egyptian codes during the Suez Crisis.

MI5 installed microphones near the cipher machine in the Egyptian embassy, and every morning, they would listen to the sounds of the machine being reset. These noises gave them clues about the new settings, which they could then use to decipher the coded messages. This type of side-channel attack became known as acoustic cryptanalysis.

In the nineties, Paul Kocher pushed the field of side-channel attacks even further. His 1996 publication showed timing attacks that could break implementations of DSA, RSA and Diffie-Hellman. He was also part of a team that developed differential power analysis, another type of side-channel attack. In addition to pioneering many of these attacks, he also played a key role in coming up with a range of countermeasures.

What side-channel attacks are not

Side-channel attacks are not to be confused with brute-forcing, which essentially involves trying every possible password combination in the hopes of eventually landing on the right one, then using the correctly guessed password to enter the system. Brute-forcing is not considered a side-channel attack, because it doesn’t rely on information emitted by the cryptosystem.

However, if a cryptosystem leaked timing information (or other side-channel clues) that an attacker could use to deduce part of the key, they could use this side-channel information to significantly speed up the brute-forcing process.
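To make the speed-up concrete, here is a minimal sketch in Python using the four-digit safe from our analogy. Everything in it is hypothetical: the combination `7391`, and the `leaky_check` function, which simply reports how many leading digits were correct as a stand-in for whatever a real side channel (timing, feel, sound) might reveal.

```python
from itertools import product

SECRET = "7391"  # hypothetical 4-digit combination, for illustration only


def check(guess):
    """A lock with no leakage: it only answers yes or no."""
    return guess == SECRET


def leaky_check(guess):
    """A leaky lock: returns how many leading digits match.
    This number stands in for the side-channel clue."""
    matched = 0
    for g, s in zip(guess, SECRET):
        if g != s:
            break
        matched += 1
    return matched


def brute_force():
    """Try every combination in order until one opens the lock."""
    attempts = 0
    for digits in product("0123456789", repeat=4):
        attempts += 1
        guess = "".join(digits)
        if check(guess):
            return guess, attempts
    raise RuntimeError("combination not found")


def side_channel_search():
    """Recover one digit at a time using the leaked match count."""
    known = ""
    attempts = 0
    while len(known) < 4:
        for d in "0123456789":
            attempts += 1
            guess = (known + d).ljust(4, "0")  # pad the unknown digits
            if leaky_check(guess) > len(known):
                known += d
                break
    return known, attempts


print(brute_force())          # ('7391', 7392)
print(side_channel_search())  # ('7391', 24)
```

With the leak, the worst case drops from 10,000 attempts to 40 (ten candidates for each of four digits), because each digit can be confirmed on its own rather than only as part of the whole combination.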

Social engineering is also not considered a side-channel attack, because it involves manipulating a person into handing over sensitive data, rather than leaked information from the cryptosystem itself. Likewise, rubber-hose cryptanalysis is not considered a side-channel attack either, because it involves using torture or other types of coercion to force people to reveal their passwords, instead of relying on the secrets that are inadvertently revealed by the system.

Examples of side-channel attacks

There are many different types of side-channel attack, differentiated by the type of information they use to uncover the secrets of the cryptosystem. These include:

Acoustic cryptanalysis

Cryptosystems emit sounds when they perform operations, and these sounds are often correlated with the operation. Acoustic cryptanalysis involves monitoring and recording these sounds, and using them in an attempt to deduce information about the cryptosystem. This can ultimately result in an attacker obtaining the keys and compromising the system.

One example is the MI5 attack against the Egyptian embassy during the Suez Crisis, which we mentioned in the section on the history of side-channel attacks. While early attacks focused on printers and encryption machines, a 2004 paper from researchers at the IBM Almaden Research Center showed that the sounds from ATM pads, telephone number pads, notebook keyboards and PC keyboards could also be exploited.

In the paper, the researchers monitored computer keyboards with a parabolic microphone, under the hypothesis that each key would make slightly different sounds. Even though the keys sound the same to the human ear, the paper describes how the academics trained a neural network to differentiate between the sounds of each one (with a 79 percent success rate).

The obvious conclusion from this research is that an attacker could use the techniques outlined in the paper to gain a user’s login credentials. However, they would need to be in the vicinity of the target. One possible mitigation strategy proposed by the researchers was to use touchscreen or rubber keyboards instead.

The researchers repeated the experiment on a notebook keyboard, an ATM PIN pad, and a telephone pad. The telephone pad and ATM PIN pad were especially vulnerable, with 100 percent success rates. This indicates a huge risk when it comes to people’s PINs.

In the following year, researchers from the University of California performed a similar experiment that reinforced the viability of these attacks.

Acoustic cryptanalysis against CPU operations and memory access

In 2004, Adi Shamir (of RSA fame) and Eran Tromer presented their research on acoustic cryptanalysis at Eurocrypt. They tested a range of laptops and desktops, finding that on some computers, it was possible to distinguish patterns of memory access and CPU operations, via the sounds that were being emitted.

They showed how RSA decryption and signature processes gave off varying sounds for different secret keys (note that in this situation, we are referring to cryptographic keys, which are kind of like passwords, and not to keyboard keys, as in the previous example).

The sounds also revealed timing information about the operations, which could be used in the timing attacks we discuss below. They found that these sounds could be used to gain a greater understanding of the cryptographic processes, and may ultimately lead to the discovery of the secret keys.

The researchers proposed dampening the sound as a countermeasure. One option is to use sound-proof boxes. Another method is to mask the acoustic signals with other noise that doesn’t correlate with the cryptographic operations. They also proposed designing the system with components that make less sound.

In 2013, Shamir, Tromer and another researcher named Daniel Genkin published an additional paper on acoustic cryptanalysis. In this instance, they demonstrated how RSA keys could be extracted from GnuPG via low-bandwidth acoustic cryptanalysis.

The attack was tested on various laptops and was able to extract full 4096-bit RSA keys within an hour, relying solely on the sound produced by the computer during the decryption of chosen ciphertexts. In the lab, they showed that the attack could be successful using only a mobile phone placed next to the laptop, or a more sensitive microphone placed at a distance of four meters.

The team proposed countermeasures similar to those in their previous paper, as well as some additions. These included:

- Masking the leakage with noise – They specified a “carefully designed” acoustic noise generator, because noises at other frequencies can be filtered out.

- Acoustic shielding – The researchers suggested that sound-proof enclosures and acoustic absorbers would reduce the signal-to-noise ratio, making the attacks less feasible.

- Ciphertext randomization – One of the most effective mitigation strategies involves adding in random values to the decryption process, via a specific formula.

- Ciphertext normalization – This involves padding the ciphertext with information that ends up eliminating some of the crucial information leakage.

- Modulus randomization – Randomizing the modulus during each exponentiation can help to prevent an attacker from distinguishing the keys and extracting them.

Timing attacks

Timing attacks involve measuring how long it takes for a cryptosystem to perform certain operations. Computer operations take time to execute, and this amount of time can vary based on the input. These differences can be caused by processor instructions that run in non-fixed time, branching and conditional statements, performance optimizations, RAM cache hits, and many other factors.

If an attacker can precisely measure the length of time that each cryptosystem operation takes, they can use this information to work toward figuring out the inputs. While it may seem like timing information leaks would only reveal insignificant data, in certain situations, it can be enough for the attacker to uncover the key and the original plaintext.
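As a rough illustration of how a key-dependent branch turns into a timing difference, the sketch below implements textbook square-and-multiply modular exponentiation and counts the multiplications it performs. The operation count is only a stand-in for running time (we are assuming each modular multiplication costs about the same), and the function name and toy numbers are our own, not taken from any particular library.

```python
def square_and_multiply(base, exponent, modulus):
    """Left-to-right binary exponentiation.

    Returns (result, operations), where `operations` counts the modular
    multiplications performed and serves as a proxy for running time.
    """
    result = 1
    operations = 0
    for bit in bin(exponent)[2:]:          # most significant bit first
        result = (result * result) % modulus   # always square
        operations += 1
        if bit == "1":                     # branch depends on the secret exponent
            result = (result * base) % modulus
            operations += 1
    return result, operations


# Two 7-bit exponents with very different numbers of 1 bits.
print(square_and_multiply(5, 0b1000001, 3233)[1])  # 9
print(square_and_multiply(5, 0b1111111, 3233)[1])  # 14
```

Both exponents are the same length, yet the second takes noticeably more work. An attacker who can measure this difference learns something about how many 1 bits the secret exponent contains, and more refined measurements can narrow things down further.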

The following factors can impact how useful timing information may be to an attacker:

- The CPU that runs the system.

- The design of the cryptographic system and the algorithms it uses.

- The way the system has been implemented.

- Any timing attack countermeasures that may be in place.

The more accurately an attacker can measure the timing of various operations, the more useful the information can be.

Timing attacks are demonstrated in Paul Kocher’s Timing Attacks on Implementations of Diffie-Hellman, RSA, DSS and Other Systems paper. In the paper, Kocher showed that under certain situations, an attacker may be able to break RSA, DSS, Diffie-Hellman and other cryptosystems.

To do so, the attacker would need to be able to passively eavesdrop on an interactive protocol while the victim was performing the same operation several times, with a number of different values. If the attacker measured the timing variations caused by certain aspects of the cryptosystem, it could give them the information they needed to compromise the key.

To mitigate the attack, Kocher proposed making the software run in fixed time, although he admitted the difficulties of doing this. As an alternative, he proposed adapting blinding signature techniques that would prevent attackers from being able to see the modular exponentiation function’s inputs. This involves updating important inputs before each modular exponentiation step, preventing attackers from gaining useful knowledge about the input to the modular exponentiation, and ultimately preventing the timing attack (although there is a theoretical caveat, which we won’t delve into).

A 2003 paper called Remote Timing Attacks are Practical furthered Kocher’s work, and showed how timing attacks could also be used against general software systems. The paper was significant, because it showed that these attacks were practical against stronger devices. Prior to the paper’s publication, Kocher’s attack had only been applied against security tokens.

In the 2003 paper, the researchers used a timing attack to extract private keys from an OpenSSL-based web server that ran on a machine in the local network. They also extracted secret keys from a separate process running on the same computer, and through a virtual machine.

The researchers concluded their paper by putting forward three separate defenses. The first and preferred method was through RSA blinding by adding random inputs. This prevents the timing of the decryption process from revealing the key.
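The idea behind RSA blinding can be sketched in a few lines of Python. This is a toy illustration under our own assumptions, not the code from the paper or from OpenSSL: the key (`N = 3233`, `E = 17`, `D = 2753`) is a tiny textbook example, and `blinded_decrypt` is a hypothetical name. The ciphertext is multiplied by a random value raised to the public exponent before the private-key operation, so the value being exponentiated is unpredictable to the attacker, and the random factor is divided out afterwards.

```python
import math
import secrets

# Toy RSA key (far too small for real use; for illustration only).
P, Q = 61, 53
N = P * Q      # 3233
E = 17         # public exponent
D = 2753       # private exponent: E * D = 1 mod (P - 1) * (Q - 1)


def blinded_decrypt(ciphertext):
    """Decrypt with blinding, so the private-key operation never sees
    the attacker-chosen ciphertext directly."""
    # Pick a random blinding factor r that is invertible modulo N.
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            break
    blinded = (ciphertext * pow(r, E, N)) % N   # c' = c * r^E mod N
    blinded_plain = pow(blinded, D, N)          # (c')^D = m * r mod N
    return (blinded_plain * pow(r, -1, N)) % N  # remove r to recover m


message = 65
ciphertext = pow(message, E, N)
assert blinded_decrypt(ciphertext) == message
```

Because a fresh `r` is chosen for every decryption, the timing of the exponentiation no longer correlates with the ciphertext the attacker submitted. Note that `pow(r, -1, N)` for the modular inverse needs Python 3.8 or later.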

Another option was to stop RSA decryptions from being dependent on the input ciphertext, although the researchers believed this was harder to achieve. The final proposal was to require all RSA computations to be quantized, meaning that each decryption would need to be computed in the length of time that the longest decryption takes. We cover this in more detail in the following section.

Mitigating timing attacks

Timing attacks can be mitigated with constant-time algorithms. They work under the theory that if the timing information from various operations gives clues that help an attacker break the system, then eliminating this useful information will help to keep the system secure.

Instead of operation x taking a different amount of time to execute than operations y or z, constant-time algorithms cause all operations to take exactly the same amount of time to execute. When there is no variance between operations x, y and z, attackers no longer have timing information that can give them inferences about the underlying system. This eliminates their ability to use timing information to break the system.

However, constant-time algorithms do have a downside, in that all executions must take as long as the worst-performing execution, which reduces the efficiency of the algorithm.
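A common small-scale example of this trade-off is comparing a secret value, such as a MAC tag, against a guess. The sketch below contrasts an early-exit comparison with one that always touches every byte. The two hand-written functions are our own illustrations; pure Python gives no hard timing guarantees, so in practice you would reach for the standard library's `hmac.compare_digest`, which is designed for this purpose.

```python
import hmac


def leaky_equals(a, b):
    """Returns as soon as a mismatch is found, so the running time
    depends on how long the matching prefix is."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False   # early exit leaks the mismatch position
    return True


def constant_time_equals(a, b):
    """Always processes every byte, accumulating differences with OR,
    so the amount of work does not depend on where the bytes differ."""
    if len(a) != len(b):
        return False
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y      # no branch on secret data
    return diff == 0


tag = b"expected-tag"
print(constant_time_equals(tag, b"expected-tag"))   # True
print(constant_time_equals(tag, b"expected-tax"))   # False
print(hmac.compare_digest(tag, b"expected-tag"))    # True
```

The constant-time version does the full amount of work even when the very first byte is wrong, which is exactly the efficiency cost described above.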

Another mitigation technique against timing attacks is masking. Masking involves splitting the secret into several shares, which the attacker would have to collect and reconstruct in order to obtain the shared secret.
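One simple way to picture this splitting is Boolean (XOR) masking, sketched below. The function names are hypothetical and this is only the sharing step, not a full masked implementation of any cipher: every share except the last is random, and the last is chosen so that XORing all of them together gives back the secret.

```python
import secrets


def split_into_shares(secret, n_shares):
    """Split `secret` (bytes) into `n_shares` XOR shares.
    Any subset smaller than the full set looks like random bytes."""
    shares = [secrets.token_bytes(len(secret)) for _ in range(n_shares - 1)]
    last = secret
    for share in shares:
        last = bytes(a ^ b for a, b in zip(last, share))
    return shares + [last]


def recombine(shares):
    """XOR all shares together to recover the secret."""
    out = bytes(len(shares[0]))  # all-zero starting value
    for share in shares:
        out = bytes(a ^ b for a, b in zip(out, share))
    return out


shares = split_into_shares(b"key material", 3)
assert recombine(shares) == b"key material"
```

A masked implementation would then compute on the shares separately, so that no single intermediate value depends on the secret alone. This is also where the need for fresh random values comes from.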

However, masking isn’t practical in all algorithms, only those with a suitable algebraic structure. In viable situations where it requires random values, it can be difficult to source this randomness within embedded systems. In addition, there isn’t much conclusive proof that masking is effective in blocking timing attacks.

Meltdown and Spectre

At the start of January 2018, two severe vulnerabilities were published by researchers from Google and various academic institutions. The related vulnerabilities were named Meltdown and Spectre, and they affected some ARM-based processors, IBM Power processors and Intel x86 microprocessors. The wide deployment of these systems meant that a large portion of the world was affected by these vulnerabilities.

The Meltdown exploit is complicated, but one stage used a timing attack to exploit flaws in the design of the processors. Ultimately, it allowed attackers to read all memory, including sensitive information such as passwords.

Spectre affected microprocessors that perform branch prediction, which essentially involves the circuit trying to guess the direction of the execution sequence before it is known definitively. On most microprocessors, speculative execution that follows a branch misprediction can leave behind side-channel information that’s observable to attackers.

Timing these processes could reveal information about their inner workings, potentially allowing attackers to read sensitive data.

At the time of its publication, the Meltdown exploit affected a wide range of systems using the above-mentioned processors. This included all but the latest versions of Windows, macOS, iOS and Linux. It also impacted a large number of servers, cloud services, IoT devices and other technologies. The Spectre exploit affected a similarly widespread variety of systems.

Most major vendors released software patches to address the vulnerabilities ahead of their public disclosure. Some of these fixes may have negatively impacted system performance, or even caused unwanted reboots. Hardware and firmware fixes have also been released.

Cache-based side-channel attacks

Caches are either software or hardware mechanisms that store data to speed up future requests. They are often used for data that is expected to be frequently used, and help to improve efficiency. It’s much quicker for the system to retrieve data from the readily available cache, than from main memory, secondary storage, or a far-off server. Similarly, it can be quicker to retrieve data stored in cache than having to redo whatever processes were involved in computing it.

Caches are used in many areas of computing, such as hardware, software and networking. Some of these include:

- GPU cache

- CPU cache

- Web cache

- Disk cache

A good example of the caching process is CPU caching. CPUs can reduce the average time and energy used to access certain data by storing it close to the processor core, in the CPU cache. When copies of data are stored on the much faster and closer CPU caches, they are retrieved more rapidly than when they are retrieved from other memory locations.

Most CPUs have a hierarchy of cache levels, with the lower levels having faster access times, but lower numbers of blocks and smaller block sizes, as well as fewer blocks in a set. Higher-level caches have progressively more blocks, larger block sizes, a greater number of blocks in each set, but longer access times. However, these higher-level caches still have much faster access times than main memory.

While this design of cache hierarchies results in much faster computations on average, there are also some side-channel attacks that can exploit it. Cache-related attacks have been investigated since the nineties, but these earlier attacks mainly involved hardware modifications and weren’t particularly feasible.

More recently discovered attacks operate purely in software, which opens up a world of opportunities to hackers. These include timing-based cache attacks that can be launched remotely by exploiting the difference in access time between on-chip caches and main memory. If attackers can run code that shares these caches with a victim, they can monitor the pattern of cache hits and misses by measuring access times.

Similar to the timing attacks in the previous section, this timing information from the on-chip caches and main memory can be used to infer sensitive information. In combination with knowledge of the cryptographic algorithm, an attacker could use these timing inferences to extract the secret keys, compromising the security mechanisms.

Intel processor data leakage

As specific examples of how these side-channel cache attacks can occur, researchers from several different institutions investigated the data leakage from Intel processors. They identified three different contexts where leakage could occur between virtual machines:

- When multithreading CPUs run threads from both the attacker and their potential victim on separate virtual machines but on the same physical core. Because the two virtual machines share the same core, the information in the victim’s level 1, level 2, and last level caches is vulnerable.

- During context switching between running processes, when two different virtual machines are on the same core. Preemptive scheduling allows two separate processes to run on the same core, sharing the cache. If the system uses the scheduling to switch between the victim’s virtual machine processes, it may leak the activity to the attacker. This affects information in all of the caches.

- In multi-core contexts where the last level cache is shared. Despite the processes running on different cores, the shared last-level cache still leaves a victim’s virtual machine vulnerable to attack. This setup makes it possible for attacker processes to manipulate the cache lines of the victim’s processes on a separate core. This vulnerability exists because of Intel’s inclusive cache architecture, in which evicting a line from the shared last level cache also removes it from the caches closer to the victim’s core.

Together, these three types of information leakage leave a range of opportunities for hackers to launch a variety of attacks. Some of these include:

Prime and Probe attack

The prime and probe attack is capable of exploiting level one cache data and last level cache data. It requires the attacker to have spying software that can observe the cache through one of the above-mentioned leak types where the two parties share resources.

The attacker begins with the prime step, where it fills the cache with its own code. It then goes into a rest state, allowing the victim to execute its own code. In the probe step, the attacker monitors its own filled cache while continuing to execute code normally. The attacker then measures how long it takes to load each set of data that it puts in the cache.

In some cases, the victim will evict the attacker’s cache sets, which results in the attacker’s fetching process taking longer. Alongside detailed knowledge of the algorithm, the attacker can use this timing information to estimate which cache lines were loaded by the victim. This information can be used by the attacker to help deduce the keys.
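The prime, wait and probe steps can be simulated with a toy model. The sketch below is not a real attack: it assumes a hypothetical direct-mapped cache with eight sets, made-up hit and miss costs, and a victim whose single memory access depends on a secret index. It only shows how slow probes point to the sets the victim touched.

```python
NUM_SETS = 8
HIT_COST, MISS_COST = 1, 10   # made-up latencies for the simulation


class DirectMappedCache:
    """Toy cache: each set holds exactly one line, identified by (owner, address)."""

    def __init__(self):
        self.lines = [None] * NUM_SETS

    def access(self, owner, address):
        """Access an address and return the simulated latency."""
        index = address % NUM_SETS
        tag = (owner, address)
        if self.lines[index] == tag:
            return HIT_COST
        self.lines[index] = tag       # a miss evicts whatever was there
        return MISS_COST


def victim(cache, secret_index):
    """The victim performs one secret-dependent lookup."""
    cache.access("victim", secret_index)


def prime_and_probe(cache, run_victim):
    # Prime: fill every cache set with the attacker's own data.
    for s in range(NUM_SETS):
        cache.access("attacker", s)
    # Wait: let the victim run.
    run_victim()
    # Probe: time the attacker's own data again; slow sets were evicted.
    timings = [cache.access("attacker", s) for s in range(NUM_SETS)]
    return [s for s, t in enumerate(timings) if t == MISS_COST]


cache = DirectMappedCache()
print(prime_and_probe(cache, lambda: victim(cache, 5)))  # [5]
```

In a real attack the timings are noisy and the attacker has to repeat the measurement many times, but the inference is the same: the slow set reveals where the victim's access landed.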

Evict and time attack

Under this technique, the attacker also needs spyware access. They begin by filling the cache with their own data, just like the prime step in the previous technique. Following this, the attacker lets the victim’s program execute its own code. Its access to memory evicts some of the cache lines that the attacker had primed.

The attacker then monitors the variations in the victim’s execution times. From the load time difference, the attacker can deduce which lines the victim accessed. This information can ultimately give an attacker the foothold they need to figure out the secret keys.

Flush and reload attack

The flush and reload technique is a trace-driven attack, which means that it relies on observing the trace of a victim’s cache accesses. It depends on page sharing, where the victim and the attacker share memory pages. The threat stems from the inclusive caches in Intel x86 processors, together with the clflush instruction, which flushes a memory line from every level of the cache.

The attacker starts by flushing a shared cache line. It then idles, giving the victim time to execute and access the cache. The attacker then reloads the shared cache line and measures the time it takes for it to load.

If the victim accesses the shared cache line, the reload operation will only take a short time, because it will be available in the cache. If the reload takes significantly longer, it means that the line is being brought from memory instead and that the victim hasn’t accessed the cache line.

Because the attacker and the victim share the underlying memory, the attacker’s reload can hit on a line that the victim brought into the cache. When the timing of the reload operation indicates that the victim has accessed the line, the attacker can use this data to help it ascertain the secret keys.
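The flush, wait and reload loop can also be simulated. In the sketch below, the shared cache is just a Python set, the latencies are made up, and the victim is a hypothetical square-and-multiply routine that only touches the shared `multiply` line when the current key bit is 1. None of this is real attack code. It only illustrates why a fast reload tells the attacker that the victim used the line.

```python
HIT_COST, MISS_COST = 1, 10   # made-up latencies for the simulation

# Hypothetical names for two shared code lines in the victim's library.
SQUARE_LINE, MULTIPLY_LINE = "square", "multiply"


class SharedCache:
    """Toy cache shared by attacker and victim, tracking which lines are present."""

    def __init__(self):
        self.cached = set()

    def flush(self, line):
        self.cached.discard(line)

    def load(self, line):
        """Load a line and return the simulated latency."""
        cost = HIT_COST if line in self.cached else MISS_COST
        self.cached.add(line)
        return cost


def victim_step(cache, key_bit):
    """One iteration of the victim: always square, multiply only on a 1 bit."""
    cache.load(SQUARE_LINE)
    if key_bit:
        cache.load(MULTIPLY_LINE)


def flush_reload(cache, key_bits):
    recovered = []
    for bit in key_bits:
        cache.flush(MULTIPLY_LINE)            # flush the shared line
        victim_step(cache, bit)               # idle while the victim runs
        latency = cache.load(MULTIPLY_LINE)   # reload and time it
        recovered.append(1 if latency == HIT_COST else 0)
    return recovered


print(flush_reload(SharedCache(), [1, 0, 1, 1, 0]))  # [1, 0, 1, 1, 0]
```

A real attacker cannot step in lockstep with the victim like this and has to deal with noise and missed iterations, which is why the recovered information usually only helps to deduce the key rather than handing it over directly.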

Mitigating cache-based side-channel attacks

The above examples are just three of the many cache-based side-channel attacks. Countermeasures against the various cache-based side-channel attacks can be roughly split into three categories:

- Application-specific mitigation techniques

- Compiler-based mitigation techniques

- Redesigning hardware

Due to the complex nature of modern architecture, a given mitigation technique may not fully protect the system. Because of this, hardware and software developers need to consider the entirety of the threat model and choose mitigation techniques that can combat the specific threats faced.

Application-specific mitigation techniques

Some popular approaches to software-based mitigation involve disabling resource sharing, or isolating applications. This prevents attackers from being able to access the cache of a potential victim.

One specific method is page coloring, which is a technique that partitions the cache into cache sets at the granularity of page sizes. These memory pages are given colors, and only pages with the same colors can be mapped to the same cache set. The pages that are sequential in the virtual address space are assigned different colors, so they can’t be mapped to the same cache set.
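As a rough sketch of how a page's color can be worked out, the Python below uses the common textbook rule for a physically indexed, set-associative cache: the number of colors is the size of one cache way divided by the page size. The cache parameters are assumptions chosen for illustration, and real processors may index their last level cache with more complicated hash functions that this model ignores.

```python
def num_colors(cache_bytes, associativity, page_bytes=4096):
    """Number of page colors: how many pages fit in one way of the cache."""
    way_bytes = cache_bytes // associativity
    return max(1, way_bytes // page_bytes)


def page_color(physical_address, cache_bytes, associativity, page_bytes=4096):
    """Color of the page containing `physical_address`.
    Pages with different colors can never map to the same cache sets."""
    frame_number = physical_address // page_bytes
    return frame_number % num_colors(cache_bytes, associativity, page_bytes)


# Hypothetical cache: 2 MiB, 16-way set associative, 4 KiB pages.
CACHE = 2 * 1024 * 1024
print(num_colors(CACHE, 16))              # 32
print(page_color(0 * 4096, CACHE, 16))    # 0
print(page_color(1 * 4096, CACHE, 16))    # 1
print(page_color(32 * 4096, CACHE, 16))   # 0 (wraps around to the first color)
```

An operating system or hypervisor that hands the victim and a potential attacker pages of different colors keeps them in separate parts of the cache, which is the isolation that page coloring aims for.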

There are both static and dynamic approaches to page coloring. A dynamic approach named Chameleon can provide secure coloring to secure processes, which allows it to maintain strict isolation in a virtualized environment. This prevents attackers from being able to access the victim’s cache.

Compiler-based mitigation techniques

One example of a compiler-based mitigation technique is Biscuit, which was developed by researchers from the Georgia Institute of Technology. According to their paper, Biscuit is able to detect cache-based side-channel attacks for processes scheduled on shared servers. One important aspect of Biscuit is its cache-miss model, which the compiler inserts at the entrances of the loop nests.

The cache-miss model predicts the cache misses of the corresponding loop. It uses beacons to send the cache-miss information to the scheduler at run time. The scheduler then uses this information to co-schedule processes in a way that ensures their combined cache footprint is not higher than the maximum capacity of the last level cache. The scheduled processes are monitored to compare the actual cache misses against the predicted cache misses. When anomalies are detected, the scheduler searches to isolate the attacker.
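The two decisions described above, whether a group of processes fits in the cache together and whether observed misses match the prediction, can be sketched very loosely in Python. This is not Biscuit's actual algorithm or interface: the cache capacity, the `tolerance` threshold and both function names are assumptions made purely for illustration.

```python
LLC_CAPACITY = 8 * 1024 * 1024   # hypothetical 8 MiB last level cache


def can_co_schedule(predicted_footprints):
    """True if the combined predicted cache footprint (in bytes)
    fits within the last level cache."""
    return sum(predicted_footprints) <= LLC_CAPACITY


def find_anomalies(predicted_misses, actual_misses, tolerance=0.5):
    """Return the names of processes whose observed cache misses exceed
    the prediction by more than `tolerance` (0.5 means 50 percent)."""
    anomalous = []
    for name, predicted in predicted_misses.items():
        if actual_misses[name] > predicted * (1 + tolerance):
            anomalous.append(name)
    return anomalous


MiB = 1024 * 1024
print(can_co_schedule([3 * MiB, 4 * MiB]))   # True
print(can_co_schedule([6 * MiB, 4 * MiB]))   # False
print(find_anomalies({"a": 100, "b": 100}, {"a": 110, "b": 400}))  # ['b']
```

The intuition is that if co-scheduled processes were predicted to fit in the cache together, a process suffering far more misses than expected suggests that something else is deliberately evicting its data.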

In the paper, the researchers showed that Biscuit’s compiler-based mitigation technique was capable of detecting and mitigating a range of attacks, including the prime and probe as well as the flush and reload techniques that we mentioned earlier.

Redesigning hardware

General hardware-based mitigation techniques are limited, because they have large performance overheads that make their implementations impractical. These hardware-based mitigation techniques generally require the design of new hardware that minimizes the risks of loosely coupled architecture and the risks of shared resources becoming available.

One of the major threats comes from the existence of hardware timing channels. To counter these risks, it’s possible to introduce random noise into the system. This prevents attackers from being able to correlate their measurements with the true operations. However, as we stated above, this tends to be too inefficient to be practical.

Power monitoring side-channel attacks

Power monitoring side-channel attacks rely on an attacker having access to the power consumption of a cryptographic system. The amount of power a device draws is governed by the laws of physics, so it varies with the operations the hardware is performing.

This means that if an attacker monitors the power consumption of a cryptographic system while it performs various operations, they may be able to use the information they gather to figure out the secret keys. There are two different types of power analysis:

Simple power analysis

This technique involves graphing a cryptosystem’s current usage over time. The power consumption will vary as the system performs different operations. By analyzing the different power consumption profiles of various operations, an attacker may be able to deduce information about how the device operates, as well as its keying material.

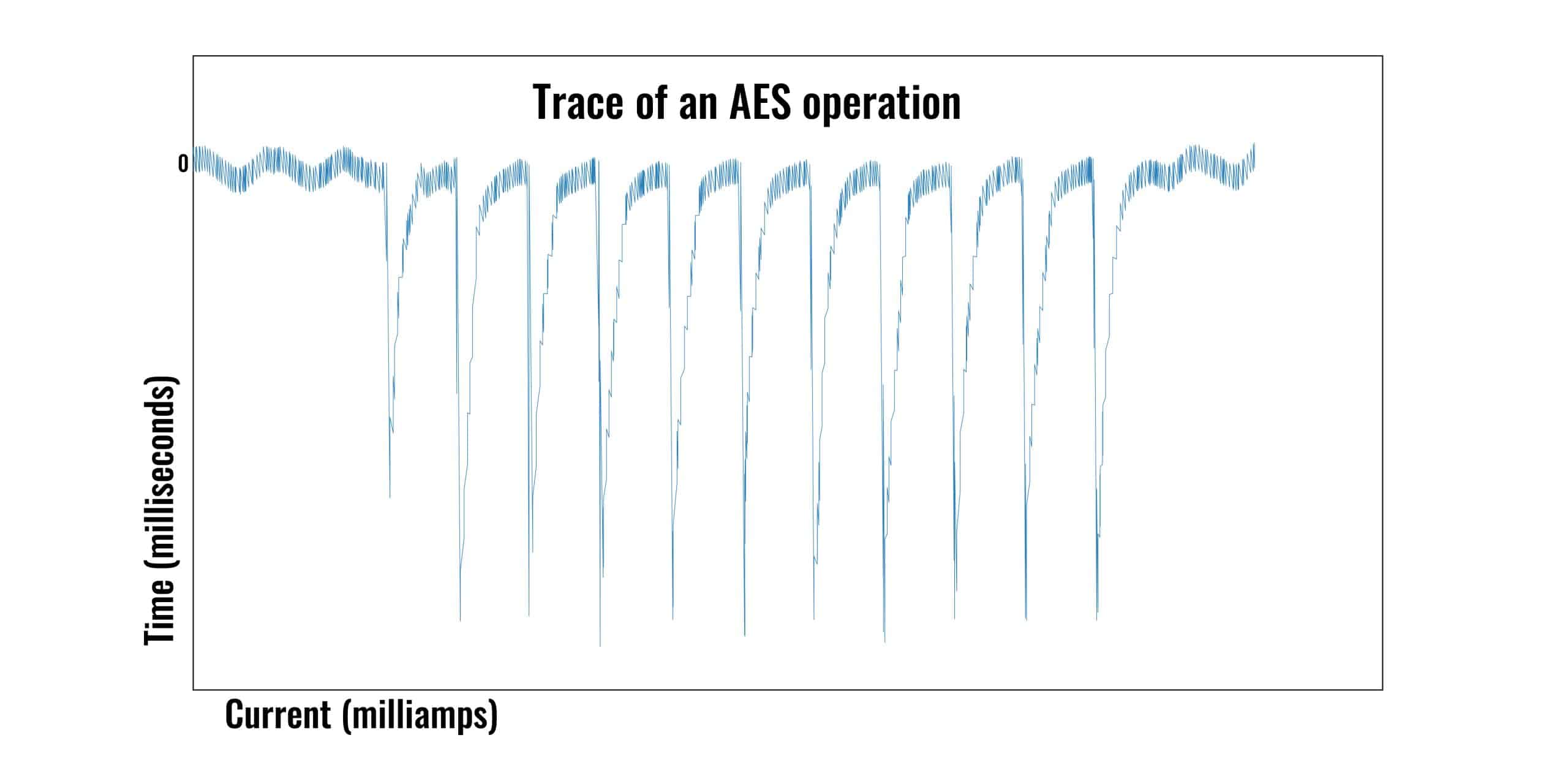

Under simple power analysis, an attacker takes a set of power information measurements over the course of a cryptographic operation. This series of measurements is referred to as a trace.

Let’s pretend an attacker was monitoring a cryptosystem as it performed an AES operation:

In the trace shown above, we have 11 spikes. The first is about two thirds as long as the rest, which are all about the same length. Each of the spikes is also roughly the same distance apart. The first spike is due to the power consumption that occurs when the plaintext is loaded into the register. The other 10 spikes indicate the current usage from each of the 10 rounds of the AES algorithm.

Just as the graph shows us very clearly when each of the 10 rounds of AES is occurring, if we were to zoom in, we would also gain insight into the minutiae of these operations. The more an attacker zoomed in, the more information they could glean from the power consumption, which could reveal important information, such as details of the key schedule and the sequence of the instructions that are executed. Ultimately, this information may lead to the attacker breaking the cryptosystem.
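To show how little analysis it takes to read the structure out of a trace like this, here is a small Python sketch. The trace is synthetic: the sample values, the spike widths and the thresholds are made up for illustration, and real measurements would be far noisier.

```python
def count_spikes(trace, threshold):
    """Count how many times the trace rises above the threshold."""
    spikes = 0
    above = False
    for sample in trace:
        if sample > threshold and not above:
            spikes += 1  # a new spike starts on each rising edge
        above = sample > threshold
    return spikes


def synthetic_aes_trace():
    """Made-up trace: one shorter spike for the load, then ten round spikes."""
    idle = [0.1] * 5
    load_spike = [0.65] * 3   # roughly two-thirds the size of a round spike
    round_spike = [1.0] * 3
    trace = idle + load_spike
    for _ in range(10):
        trace += idle + round_spike
    return trace + idle


trace = synthetic_aes_trace()
total_spikes = count_spikes(trace, threshold=0.5)   # load plus rounds
round_spikes = count_spikes(trace, threshold=0.8)   # rounds only
```

A low threshold picks up every spike, while a higher one skips the shorter plaintext load and leaves only the rounds. An attacker can use the same kind of reasoning to tell which operation is running at each point in time.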

Mitigating simple power analysis

Power analysis is generally done passively, so there isn’t normally a way for the cryptographic devices to detect whether or not it’s occurring. It’s also a non-invasive process, and together these factors make both auditing processes and physical shields unsuitable as defense mechanisms.

As a more secure defense mechanism, implementations of cryptographic systems should be designed so that no secret values affect conditional branches within the software (these are essentially programmed instructions that say “if x happens, branch to y”).

This is necessary because when cryptographic systems feature conditional branching in the execution of their software, it can leak information that may make it easy for attackers to figure out secret data. This is due to the fact that the power usage will vary according to which branch the cryptographic system takes. With careful monitoring, an attacker may be able to use this information to infer details about the underlying cryptosystem or the keying material.
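The difference between branching and non-branching code can be sketched in Python using modular exponentiation, the core operation of RSA. The first function only multiplies when a secret bit is 1, and its multiplication counter stands in for the extra power an attacker would see. The second uses a Montgomery ladder, which performs the same steps for every bit. Note that Python's big integers are not constant time, so this only illustrates the structure of the code and is not a hardened implementation.

```python
def modexp_branching(base, exponent, modulus):
    """Square-and-multiply that multiplies only when the secret bit is 1."""
    result = 1
    multiplications = 0
    for bit in bin(exponent)[2:]:
        result = (result * result) % modulus
        if bit == "1":  # secret-dependent branch: the extra work leaks the bit
            result = (result * base) % modulus
            multiplications += 1
    return result, multiplications


def modexp_ladder(base, exponent, modulus, bit_length):
    """Montgomery ladder: one multiply and one square per bit, whatever its value."""
    r0, r1 = 1, base % modulus
    for i in reversed(range(bit_length)):
        mask = -((exponent >> i) & 1)  # 0 if the bit is 0, -1 (all ones) if it is 1
        swap = (r0 ^ r1) & mask
        r0, r1 = r0 ^ swap, r1 ^ swap  # conditional swap without an if
        r1 = (r0 * r1) % modulus
        r0 = (r0 * r0) % modulus
        swap = (r0 ^ r1) & mask
        r0, r1 = r0 ^ swap, r1 ^ swap  # swap back
    return r0


# Two secret exponents of the same length cause different amounts of work.
_, work_sparse = modexp_branching(5, 0b1000, 97)
_, work_dense = modexp_branching(5, 0b1111, 97)
ladder_result = modexp_ladder(5, 0b1011, 97, bit_length=4)
```

With the branching version, the exponent `0b1000` triggers one multiplication while `0b1111` triggers four, which is exactly the kind of difference that shows up in a power trace. The ladder gives the same answer as Python's built-in `pow` while running the same sequence of operations for each bit.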

Similarly, implementations need to be designed so that the following sources of variation don’t offer information that could be used to compromise the system:

- CPU instructions with variable timing – These variations give similar hints to the attacker.

- Microcode variations – Even in branchless code with constant timing, microcode variations may still feature data-dependent power consumption variations, which attackers can take advantage of.

- Various arithmetic operations – When these operations have large variations in their complexity, it also leads to wide variances in their respective power consumption. This information can also be exploited.

- Context switches on multithreaded CPUs and timer interrupts – Even though these variations are unrelated to the cryptographic processing, they can give off power information that may be useful to an attacker. However, the uses of this information tend to be more limited than the aforementioned examples.

Differential power analysis

Differential power analysis is a more sophisticated technique that is more difficult to defend against. The idea was first introduced by Paul Kocher et al. in their 1998 paper, Differential Power Analysis.

In the paper, the researchers described how there are further correlations between power consumption and the operations being performed by the cryptosystem, beyond the variations that we have already discussed in the section on simple power analysis.

While these other variations are generally smaller and can be overshadowed by noise and measuring errors, it is often still possible to use these measurements to break the cryptosystem. Differential power analysis uses statistical functions that are customized toward the specific cryptographic algorithm that an attacker is targeting.

In their initial paper, the academics described differential power analysis against the Data Encryption Standard (DES), because it was still a popular algorithm at the time of their research. The math behind how the attack worked against DES is complicated, so we won’t go into it.

Instead, it’s more important to note that because these variations are generally smaller and compete against more noise, differential power analysis techniques include error correction and signal processing properties. These make it possible for attackers to gain insights about the cryptographic operations that aren’t visible through simple power analysis.

In certain situations, this allows them to extract secrets and break cryptosystems that are secure against simple power analysis. Differential power analysis is also viable against other encryption algorithms, such as AES.
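While the math against DES is out of scope, the statistical idea can be sketched on a toy example in Python. Everything here is simulated and assumed for illustration: the device has a 4-bit secret key, it looks up the 4-bit S-box from the PRESENT cipher, and its power consumption is the number of 1 bits in the result plus random noise. The sketch also uses the correlation variant of the attack (often called correlation power analysis) rather than the difference-of-means test from the original paper.

```python
import random

# 4-bit S-box from the PRESENT cipher, a small stand-in for a real cipher's S-box.
SBOX = [0xC, 0x5, 0x6, 0xB, 0x9, 0x0, 0xA, 0xD,
        0x3, 0xE, 0xF, 0x8, 0x4, 0x7, 0x1, 0x2]


def hamming_weight(value):
    """Number of 1 bits in the value."""
    return bin(value).count("1")


def simulate_traces(secret_key, count, noise_sigma, rng):
    """Known plaintexts and one noisy power sample per encryption (simulated)."""
    plaintexts = [rng.randrange(16) for _ in range(count)]
    samples = [hamming_weight(SBOX[p ^ secret_key]) + rng.gauss(0.0, noise_sigma)
               for p in plaintexts]
    return plaintexts, samples


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def recover_key(plaintexts, samples):
    """Try every key guess and keep the one whose prediction best matches the samples."""
    scores = {}
    for guess in range(16):
        predicted = [hamming_weight(SBOX[p ^ guess]) for p in plaintexts]
        scores[guess] = pearson(predicted, samples)
    return max(scores, key=scores.get)


rng = random.Random(1)  # fixed seed so the simulation is repeatable
plaintexts, samples = simulate_traces(secret_key=0xB, count=2000, noise_sigma=1.0, rng=rng)
recovered = recover_key(plaintexts, samples)
```

No single sample reveals anything useful here, because the noise is as large as the signal. Across 2,000 samples, however, only the correct key guess predicts the power consumption well, so it stands out from the 15 wrong guesses.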

There is an even more advanced form of differential power analysis known as second-order differential power analysis. These attacks can use differing time offsets, a range of measuring techniques, and multiple sources of data. While they are theoretically capable of breaking some systems that are resistant to both simple and differential power analysis attacks, they are also far more complex.

Because most real world systems that are susceptible to second-order differential power analysis are also susceptible to normal differential power analysis, these attacks are mainly studied by researchers and aren’t frequently seen in the wild.

Mitigating differential power analysis

The more sophisticated nature of differential power analysis makes it much more difficult to defend against in comparison to simple power analysis. This is because even if the most obvious correlations between the power consumption and cryptographic operations have been avoided, there still may be some subtle hints in the data.

With the right statistical analysis, these could give away details of the cryptosystem’s operation and its keying material, which may ultimately result in the system being compromised.

Despite this, there are some approaches that aim to defend against differential power analysis. These include:

- Reducing the size of the signals – Minimizing the size of the signals can help to reduce the quality of the information that’s available for the statistical analysis, in the hope of making these attacks no longer viable. Cryptosystem designers can accomplish this by selecting operations that have constant execution path code and that leak less information via their power consumption. They can also balance Hamming weights. The Hamming weight of a value is the number of symbols in it that are not zero, which for binary data is the number of 1 bits. Because power consumption tends to vary with this number, keeping it balanced across the values being processed reduces the leak.

- Adding noise – Adding noise into the power consumption measurements increases the number of samples an attacker would need to mount a successful attack. When a sufficient amount of noise is added, it can make differential power analysis unfeasible. Randomizing execution timing and order can also help to make these attacks unfeasible.

- Designing cryptosystems with realistic assumptions about the hardware they operate on – Side-channel attacks use information from the hardware to break the cryptosystem, so it’s important to consider its weaknesses when designing cryptosystems. One defense against these weaknesses is to include key use counters that stop attackers from being able to gather large numbers of samples. Another way to prevent attackers from collecting data on large numbers of executions is to aggressively use exponent and modulus modification processes in public-key schemes. Nonlinear key update procedures can also ensure that power traces can’t be correlated from one operation to the next.
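The key counter idea from the last point can be sketched in a few lines of Python. The class name, the exception and the limit of three uses are hypothetical. A real device would keep the counter in tamper-resistant storage and choose a limit well below the number of traces an attack is expected to need.

```python
class KeyUseLimitExceeded(Exception):
    """Raised once a key has been used as many times as it is allowed."""


class CountedKey:
    """Hypothetical wrapper that refuses to release a key after max_uses operations."""

    def __init__(self, key_bytes, max_uses):
        self._key = key_bytes
        self._max_uses = max_uses
        self._uses = 0

    def use(self):
        """Return the key for one operation, or refuse if the budget is spent."""
        if self._uses >= self._max_uses:
            raise KeyUseLimitExceeded("key must be replaced before further use")
        self._uses += 1
        return self._key


key = CountedKey(b"\x01" * 16, max_uses=3)
released = [key.use() for _ in range(3)]
try:
    key.use()
    fourth_use_allowed = True
except KeyUseLimitExceeded:
    fourth_use_allowed = False
```

Because the attacker can only ever observe a handful of operations with any one key, they can't collect the thousands of traces that the statistical analysis depends on.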

Electromagnetic attacks

Electronic devices emit electromagnetic radiation. This is due to the fact that wires carrying current emit magnetic fields, so whenever a device is being used, it creates these small amounts of electromagnetic radiation. When cryptosystems run operations, the amount of electromagnetic radiation can vary according to the type of operation.

Attackers can measure the electromagnetic radiation of these different operations over time, then use these insights to gain information about the cryptosystem and its keying material. Ultimately, an attacker may be able to leverage this information to compromise the cryptosystem and access sensitive data.

Electromagnetic attacks can be done in a non-invasive manner. However, depackaging a chip may help to improve the signal, which may make it easier to break the cryptosystem. Examples of electromagnetic attacks include the Bell Labs discovery and van Eck phreaking, which we discussed in the section on the history of side-channel attacks.

Simple cryptographic devices like smart cards and field-programmable gate arrays tend to be the most vulnerable to electromagnetic attacks. While attacks against smartphones and computers are technically possible, these complex devices emit a lot of noise from their other processes, which tends to make electromagnetic attacks less appealing than alternatives like exploiting software vulnerabilities.

Simple electromagnetic analysis

The most basic type of electromagnetic attack is simple electromagnetic analysis. This involves an attacker directly measuring the electromagnetic activity. In order for these attacks to succeed, attackers need a deep understanding of their targeted algorithm and the way that it has been implemented.

Attackers measure the electromagnetic radiation emitted by various cryptographic operations over time, and graph this information in what is known as a trace. These traces of the electromagnetic radiation can give them details about the cryptographic operations, and even lead an attacker to figure out the keying material.

The underlying concept is that cryptographic algorithms run in a defined sequence of varying operations. When the electromagnetic radiation is graphed in a trace, it’s possible to recognize these operations based on the spikes of activity. This works much the same as in the simple power analysis technique we discussed above.

In some cases, attackers may be able to compromise the system from a single trace of electromagnetic measurements. Attackers may also use multiple traces in their attempts to deduce the secrets of the cryptosystem. The advantage of multiple traces is that they allow attackers to reduce the amount of noise in their measurements. Attacks that rely on statistical analysis of multiple traces are known as differential electromagnetic analysis attacks.
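The reason multiple traces help can be shown with a short simulation in Python. The signal shape, the noise level and the number of traces are arbitrary assumptions. The point is only that random noise tends to cancel out when traces of the same operation are averaged, while the underlying signal does not.

```python
import random
import statistics


def noisy_trace(signal, sigma, rng):
    """One simulated measurement: the true signal plus Gaussian noise."""
    return [s + rng.gauss(0.0, sigma) for s in signal]


def average_traces(traces):
    """Average many traces of the same operation, sample by sample."""
    return [sum(column) / len(column) for column in zip(*traces)]


def residual_noise(trace, signal):
    """Standard deviation of whatever is left after removing the true signal."""
    return statistics.pstdev([t - s for t, s in zip(trace, signal)])


rng = random.Random(7)  # fixed seed so the simulation is repeatable
signal = [1.0 if i % 20 < 5 else 0.0 for i in range(200)]  # made-up spikes

single = noisy_trace(signal, sigma=1.0, rng=rng)
averaged = average_traces([noisy_trace(signal, 1.0, rng) for _ in range(100)])

single_noise = residual_noise(single, signal)
averaged_noise = residual_noise(averaged, signal)
```

In this simulation the noise in the averaged trace is roughly a tenth of the noise in a single trace, so spikes that were buried in one measurement become visible after a hundred.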

Differential electromagnetic analysis

Differential electromagnetic analysis may be useful in instances where simple electromagnetic analysis didn’t yield enough information, or if there was too much noise in the measurements. Another advantage is that these attacks don’t require as much knowledge about the targeted cryptosystem in order for them to be effective. They are also viable against symmetric-key encryption implementations.

While these attacks do have additional uses in comparison to simple electromagnetic analysis, they are also more complex, which makes them more difficult to mount.

Mitigating electromagnetic analysis attacks

Most electromagnetic analysis attacks require the attacker to be in close proximity to the device. This means that physically securing the location can help to protect the cryptosystem. Another method of mitigating electromagnetic analysis attacks is to physically shield the electromagnetic radiation. The underlying hardware can be designed with shielding, such as a Faraday cage, which will reduce the signal’s strength.

Attackers often depackage chips to make the signal stronger, which means that the security can be enhanced by gluing the chip. If the chip is glued in such a way that it can’t be removed without being destroyed, it prevents attackers from being able to depackage it.

Another option is to design the system so that there is no longer a correlation between the cryptographic algorithm and electromagnetic radiation. This can be accomplished through clock cycle randomization, randomizing the order of bit encryption, and process interrupts.
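As a rough illustration of randomizing the order of operations, the Python sketch below processes the bytes of a block in a fresh random order on every call. The `transform` argument is a placeholder for whatever independent per-byte step a real cipher performs, and the XOR used here is not a real cipher operation.

```python
import secrets


def process_in_random_order(block, transform):
    """Apply transform to every byte, visiting positions in a random order."""
    order = list(range(len(block)))
    secrets.SystemRandom().shuffle(order)  # new unpredictable order each call
    output = [0] * len(block)
    for position in order:
        output[position] = transform(block[position])
    return bytes(output)


def placeholder_step(byte):
    """Stand-in for an independent per-byte cipher step (assumption)."""
    return byte ^ 0x5A


block = bytes(range(16))
shuffled_result = process_in_random_order(block, placeholder_step)
in_order_result = bytes(placeholder_step(b) for b in block)
```

The output is identical to processing the bytes in order, but the attacker no longer knows which byte is being handled at a given point in the trace. This makes it harder to line up measurements from different runs.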

Other side-channel attacks

There are many other types of complex side-channel attacks, but for the sake of brevity, we won’t cover them all in depth. These others include:

- Differential fault analysis – This type of side-channel attack involves an attacker attempting to cause faults in the system in the hope that this may reveal information regarding the system’s internal state.

- Thermal imaging – Thermal imaging attacks monitor the heat generated by the system, and attempt to correlate the temperature with the operations that are occurring.

- Optical attacks – These attacks can be used to monitor and record the visual signals that are emitted during cryptographic operations. One example is the indicator LEDs on many computers, whose blinking can correlate with hard disk drive activity.

- Allocation-based side-channel attacks – Each of the previously discussed side-channel attacks can be seen as consumption based. The information leakages in these attacks are based on the amount of resources that are consumed in a cryptographic operation. Allocation-based side-channel attacks differ in that the attacker monitors the amount of resources that have been allocated to a process, not the amount that has been consumed. They then use this information in an attempt to break the system.

Each of the above attacks operates according to similar principles as the other side-channel attacks we have covered. In essence, when secure algorithms are implemented without the proper mitigation techniques, each of these channels can allow information about the cryptographic operations to leak.

If an attacker monitors and analyzes this information, they can use it to figure out details of the cryptographic system and the secret keys, ultimately allowing them to compromise the system’s security.

Secure algorithms still need secure implementations

Having an understanding of side-channel attacks is critical for securing a system. It’s easy to assume that as long as a device is using a secure algorithm like AES-256, it will be secure purely due to the strength of the algorithm. While AES-256 is still considered secure as an algorithm, the true security of the device is also dependent on its implementation.

If an algorithm like AES-256 has been implemented without taking these side-channel attacks into consideration or deploying the mitigation techniques we have discussed, then it may still be vulnerable to attackers.

This is one of the reasons for the common adage in the cryptography community, “Don’t roll your own crypto”. It basically means, “Don’t implement your own cryptographic systems (unless you are an expert and your work will be reviewed by teams of other experts).”

While it’s easy to build a cryptosystem that you can’t personally figure out how to break, that doesn’t mean that no one else will be able to break it. Given just how many geniuses are out there, the immense complexity of these systems, and the many avenues for side-channel attacks, it’s critical that any implementation is put through a rigorous review process.

If this doesn’t take place, it makes it far more likely for a clever hacker to sneak into the system using one of the many side-channel attacks, or through other techniques. Ultimately, this can put all of the data that’s protected by the system at risk.
