The CIA triad is simply an acronym for confidentiality, integrity and availability. These are three vital attributes in the world of data security.

The CIA triad has nothing to do with the spies down at the Central Intelligence Agency. While this may be a bit of a letdown, we can still shoehorn some kind of reference to espionage for you. If you don’t have the right security controls in place to provide confidentiality, integrity and availability, then your data will be an easy target for the real CIA, the NSA, cybercriminal groups, basement hackers and all manner of other online adversaries. These principles are critical for keeping our information both secure and usable.

The CIA triad is an important concept in information security, so let’s give you a quick rundown of what each of these security attributes actually is. We’ll delve into them in more depth later on:

- Confidentiality is the property of restricting access to systems or data to authorized users only. Essentially, keeping data confidential means keeping it a secret from everyone else.

- Integrity means that data must be complete and accurate, and that it hasn’t been corrupted or tampered with.

- Availability refers to the data being accessible when it is required.

All three of these security attributes are critical if we want to keep attackers out of our data, while still being able to access it when we need it.

The History of the CIA Triad

The earliest mention we can find of the confidentiality, integrity and availability properties being closely linked together seems to date back to a 1977 publication from the National Bureau of Standards (NBS), the agency that was later renamed the National Institute of Standards and Technology (NIST). In Post-processing audit tools and techniques, it defined computer security as:

The protection of system data and resources from accidental and deliberate threats to confidentiality, integrity, and availability.

However, the concepts themselves go back much farther. One of the earliest pieces of evidence we have for encrypting data to provide confidentiality goes back to 1500 B.C. in Mesopotamia. A craftsman had used the technique to hide a recipe for a particular pottery glaze that was highly sought-after. We can presume that the practice of keeping certain information confidential goes back much earlier than this date, because it seems unlikely that people would have just blurted out their secrets at every opportunity prior to this point in time.

During the lifetime of Julius Caesar, encryption was used to keep military and other secrets confidential. He even has his own cipher named after him, the Caesar cipher, which is a simple substitution cipher that involves encrypting a message by shifting each of its letters a certain number of places to the left or right.

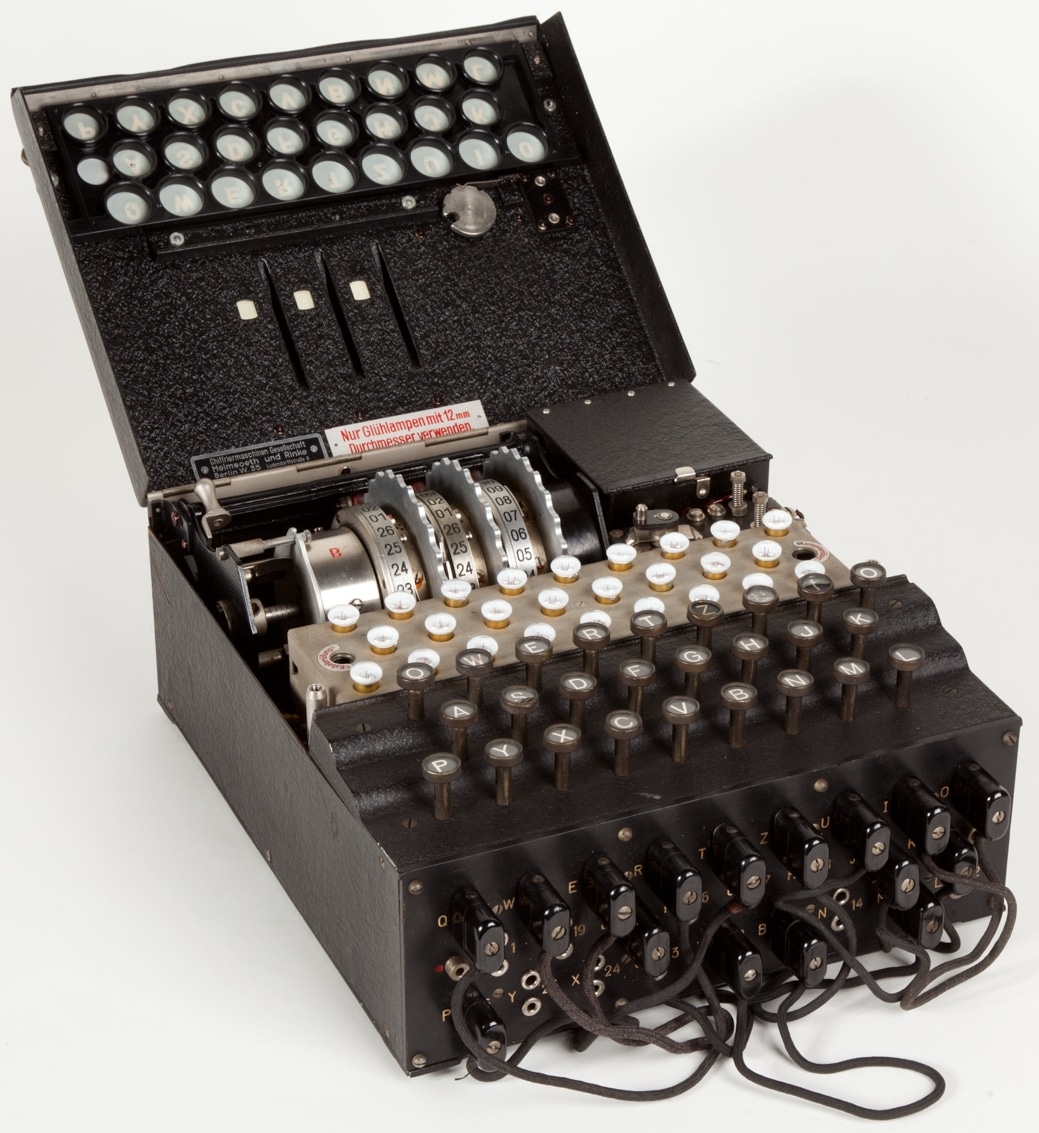
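To make the idea concrete, here is a minimal sketch of a Caesar cipher in Python. The function name `caesar_shift` and the sample message are our own choices for illustration, and the sketch assumes the plain 26-letter English alphabet rather than the Latin alphabet Caesar would have used.

```python
import string


def caesar_shift(text: str, shift: int) -> str:
    """Shift each letter by `shift` places, wrapping around the alphabet.

    A positive shift moves letters to the right (A -> B) and a negative
    shift moves them to the left. Anything that isn't a letter is left
    unchanged.
    """
    result = []
    for ch in text:
        if ch in string.ascii_uppercase:
            result.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        elif ch in string.ascii_lowercase:
            result.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        else:
            result.append(ch)
    return "".join(result)


ciphertext = caesar_shift("ATTACK AT DAWN", 3)
print(ciphertext)                    # DWWDFN DW GDZQ
print(caesar_shift(ciphertext, -3))  # ATTACK AT DAWN
```

Decryption is simply the same shift in the opposite direction. With only 25 useful shifts to try, this cipher offers no real protection today.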

Over time, the techniques for keeping data confidential became more sophisticated. The advancement was kicked up a notch during the 20th century, with the likes of the Enigma machine being heavily used during World War II.

The Enigma machine was used by the Germans during World War II to encrypt and decrypt their messages. Enigma (crittografia) – Museo scienza e tecnologia Milano by the Museo Nazionale della Scienza e della Tecnologia Leonardo da Vinci licensed under CC Attribution-Share Alike 4.0 International.

The ideas around confidentiality began to be formalized and studied more vigorously in the latter half of the century, once computing began to become more prevalent. One of the earliest papers covering confidentiality in the realm of computing was a 1976 report prepared for the United States Air Force.

As computers became faster and more of life’s everyday tasks became digitized, the need for confidentiality in the digital realm gained prominence. Encryption techniques made major leaps in the ensuing decades, because the advances in technology meant that previous solutions could no longer secure data against well-equipped adversaries.

Similarly, practices to safeguard the integrity of information probably go back much longer than any of our records regarding them do. One of the earlier papers that highlighted just how important integrity was in the information technology space was 1987’s “A Comparison of Commercial and Military Computer Security Policies”.

More advanced techniques that allow people to verify the integrity of data, such as digital signatures, were proposed in the late seventies, but didn’t start to see much adoption until the late eighties and beyond.

The history of availability is a little harder to pin down. When data only existed in a more physical form, it was available if you had access to the original, or perhaps a carbon copy. Ben Miller from the cybersecurity company Dragos proposes that the introduction of the Morris Worm was a pivotal moment in how we think about availability in the digital world.

In 1988, the Morris Worm rapidly expanded across the internet, taking down a large portion of online machines. Although it’s estimated that only between a couple of thousand and ten thousand computers were taken down by the worm, this was at a time when the internet was minuscule in comparison to today.

As one of the first major incidents to bring a significant portion of the internet down and make large quantities of data inaccessible, the Morris Worm may have made many in the industry reflect on what systems they could implement to make data access more resilient and ensure availability.

What is a security attribute?

We mentioned that confidentiality, integrity and availability are all important security attributes. But what exactly is a security attribute?

Security attributes are the properties that we need in order for the data and its environment to be considered secure. They can be seen as objectives that we should aim for whenever we are building or maintaining a security system. They give us the goals we need to strive toward, and if we achieve them to a reasonable degree, we can be relatively confident that our data is both well-protected and still usable.

Security attributes are also often referred to as security properties, security principles, security concepts, security goals, security qualities, and a number of other terms.

In the real world, we have a limited amount of skills, time and resources. There are also situations where these attributes come into conflict, such as when confidentiality mechanisms make it more challenging to deliver an acceptable standard of availability. A more concrete example would be if the security controls that protect data are too complex for employees, making the system too hard for them to use.

In many cases, the worst difficulties can be avoided with careful design. But these realities do mean that we often need to make trade-offs and prioritize certain security attributes above others. This will vary according to the situation, and organizations will have to make their own decisions regarding which trade-offs they make, and when certain attributes should be prioritized above others.

This is why organizations need to do a risk assessment and put the appropriate security mechanisms in place for their own individual threat model. The White House deals with far more valuable information than the rest of us and it has much more dedicated adversaries. This means that it faces much greater threats. Therefore, it requires much stronger defenses than your average plumber or accountant needs.

What is confidentiality?

Information confidentiality is relatively straightforward. It’s the property of making data and resources accessible only to those who are authorized to access them, and keeping them secret from all other parties.

This means that for information to maintain a truly confidential state, no one else should be able to look at it or get their hands on it, whether they are dedicated hackers or just the nosy old lady down the street. Confidential data must be kept confidential at all times, meaning that we need to find ways to keep unauthorized parties from accessing it while it is in storage, in transit, and even during processing.

Attackers have a range of different tactics at their disposal that they can use to gain access to data and breach its confidentiality. These include but are not limited to:

- Stealing an unencrypted hard drive or laptop

- Planting malware on a device

- Performing a SIM swap and taking over an account

- Social engineering attacks like phishing

- Port scanning

- Capturing network traffic

- Stealing passwords and other credentials

- Shoulder surfing

With a lot of unscrupulous people on the internet and a whole bunch of different techniques through which they can breach the confidentiality of data, we need to pay careful attention to the security mechanisms that we use to prevent unauthorized access.

But it’s not just attackers that we need to worry about. Accidents, oversight and other types of neglect can also lead to breaches of confidentiality. These can include things at the individual level, like leaving sensitive documents in the printer tray or forgetting to encrypt a message. At a higher level, mistakes that breach confidentiality can include misconfiguring security controls or other administrative screw-ups.

Even if these actions aren’t intentional, they can still have dramatic consequences for the individuals affected by the breach and the organization that was responsible. An unintentional breach may leave the affected individuals vulnerable to harmful acts such as fraud, while the organization responsible for it may face legal penalties and a range of other costs.

Following the appropriate security practices can help to limit the risks of the threats from both accidents and attackers, as well as help to reduce the chance of your data’s confidentiality being violated.

How do we keep data confidential?

If you have secret plans to take over the world and you want to keep them confidential, you could keep the plans locked in a room. As long as you are the only one with a key and no one manages to break in, you can assume that your secret plans maintain their confidentiality.

Restricting physical access like the example above is one way that we can keep data confidential. In the digital environment, we tend to use encryption algorithms like AES and RSA, alongside a range of other ciphers.

These algorithms work fairly well and it’s not feasible for attackers to break them at this stage. However, secure algorithms like AES and RSA still need to be implemented correctly in order to be secure. Another major caveat is that algorithms like AES and RSA can only provide confidentiality if the keys that encrypt the data aren’t compromised. If an unauthorized party gets their hands on the keys, these encryption algorithms can no longer keep the data private.

Encryption algorithms are generally rounded out with measures such as proper employee training, access control mechanisms, authentication systems and data classification.

What is integrity?

Now that we’ve discussed confidentiality, it’s time to get to the I in the acronym, integrity. When we talk about integrity, we are referring to protecting the accuracy and correctness of data. We need to have measures in place to make sure that data isn’t changed, altered or corrupted.

Of course, this doesn’t mean that we can’t change our data ourselves—intentional and authorized modifications aren’t considered a breach of integrity. We are primarily concerned about stopping, detecting and rectifying unauthorized or accidental violations of data integrity.

Attackers may violate the integrity of data by unleashing viruses, by breaching the security systems and tampering with it, or even trying to pass off fraudulent data as the original data.

Errors from authorized users, such as accidentally deleting or altering files, running faulty scripts, and entering invalid data can also lead to the breach of data integrity. Administrators may also misconfigure the systems, leading to larger scale information integrity issues. Data can also become corrupted through transmission or through long periods spent in storage, so it’s important that we can detect and rectify these breaches of integrity as well.
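One common way to detect accidental corruption is to record a digest of the data when it is written and compare it later. The snippet below is a rough sketch using SHA-256 from Python’s standard `hashlib` module. The sample data and variable names are made up for illustration.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical record, with its digest noted down when it was first stored.
original = b"quarterly-report: revenue=1000"
recorded_digest = sha256_digest(original)

# Simulate a single bit flipping while the data sits in storage.
damaged = bytearray(original)
damaged[-1] ^= 0x01
corrupted = bytes(damaged)

print(sha256_digest(original) == recorded_digest)   # True
print(sha256_digest(corrupted) == recorded_digest)  # False
```

Note that a plain digest like this only helps against accidents. An attacker who can change the data can usually recompute the digest as well, which is why keyed mechanisms are needed against deliberate tampering.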

Does encryption provide integrity?

In certain cases, encryption may be able to help preserve a message’s integrity. After all, if an attacker can’t access the plaintext data, it makes it harder to tamper with it.

However, preserving data integrity is not what encryption is designed to do, and it should never be the sole mechanism relied upon for providing integrity.

There are a couple of reasons for this. One is that certain stream ciphers are malleable, which means that an attacker may be able to manipulate data without knowing the encryption key for it. This makes it possible for an attacker to compromise the integrity of data, without violating its confidentiality.
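As a rough illustration of malleability, the sketch below uses a toy XOR cipher with a random keystream. This is a stand-in for a real stream cipher, not a recommendation, and the message layout is an assumption we have made for the demonstration. The attacker never learns the key, but because they can guess where the amount sits in the message, they can change it.

```python
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings of equal length."""
    return bytes(x ^ y for x, y in zip(a, b))


plaintext = b"PAY $100"
keystream = secrets.token_bytes(len(plaintext))  # secret, unknown to the attacker
ciphertext = xor_bytes(plaintext, keystream)

# The attacker guesses that byte 5 is the digit "1" and wants it to be "9".
# XORing the difference into the ciphertext changes the plaintext the
# same way, without the key ever being revealed.
tampered = bytearray(ciphertext)
tampered[5] ^= ord("1") ^ ord("9")

decrypted = xor_bytes(bytes(tampered), keystream)
print(decrypted)  # b'PAY $900'
```

The message stayed confidential the whole time, yet the recipient ends up with different content from what was sent.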

Another way that an attacker could violate the integrity of communications is if they swap an encrypted message for a previously-seen encrypted message.

To give a simplified example, let’s say that an attacker is intercepting and relaying messages sent between two parties, Alice and Bob. Let’s say that Alice and Bob are using a Caesar cipher for encryption, just to keep the example easier to understand. The plaintext of the encrypted conversation could be something like this:

Alice: Do you think Stephanie is bad?

Bob: Yes.

Alice: Should I still give Stephanie $100?

Bob: No.

Suppose we use a Caesar cipher to shift each letter and character one space to the right, so that A becomes B, B becomes C, C becomes D, and so on. (Note that the exclamation mark is one character to the right of the space in ASCII encoding, which is where the exclamation marks come from.) The encrypted version of the conversation would look like this:

Alice: Ep!zpv!uijol!Tufqibojf!jt!cbe@

Bob: Zft/

Alice: Tipvme!J!tujmm!hjwf!Tufqibojf!%211@

Bob: Op/
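If you want to reproduce the encrypted conversation yourself, the short sketch below does it. The helper name `shift_chars` is ours. Unlike a classical Caesar cipher, it simply moves every character one code point along in ASCII, with no wrap-around, which is why spaces and punctuation change too.

```python
def shift_chars(text: str, shift: int) -> str:
    """Move every character `shift` code points along (no wrap-around)."""
    return "".join(chr(ord(ch) + shift) for ch in text)


conversation = [
    ("Alice", "Do you think Stephanie is bad?"),
    ("Bob", "Yes."),
    ("Alice", "Should I still give Stephanie $100?"),
    ("Bob", "No."),
]

for speaker, message in conversation:
    print(f"{speaker}: {shift_chars(message, 1)}")
# Alice: Ep!zpv!uijol!Tufqibojf!jt!cbe@
# Bob: Zft/
# Alice: Tipvme!J!tujmm!hjwf!Tufqibojf!%211@
# Bob: Op/
```

Calling `shift_chars(ciphertext, -1)` reverses the process.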

Even if the attacker couldn’t figure out the cipher and read the messages, they could still violate the integrity of the conversation by simply relaying Bob’s first reply twice and dropping his second reply. If they did this, the conversation would look like this:

Alice: Ep!zpv!uijol!Tufqibojf!jt!cbe@

Bob: Zft/

Alice: Tipvme!J!tujmm!hjwf!Tufqibojf!%211@

Bob: Zft/

This means that when the final message is decrypted, the recipient will have seen the following conversation:

Alice: Do you think Stephanie is bad?

Bob: Yes.

Alice: Should I still give Stephanie $100?

Bob: Yes.

As you can see, even though the attacker may not have actually known what exactly they were doing, they have still managed to violate the integrity of the conversation and may end up causing the recipient to do something that they shouldn’t have done. This is another vital reason why we cannot rely on encryption to maintain the integrity of data.

Mechanisms for protecting data integrity

Due to threats like those outlined above, data also needs protection mechanisms in order to ensure its integrity. There are a range of different measures that can help to maintain the integrity of data within a given security system.

These measures may also help to protect other security attributes, in the same way that encryption can assist in maintaining integrity even though its main purpose is to act as a confidentiality mechanism. The different security controls need to work in conjunction with each other in order to provide integrity. In isolation, there may be ways that attackers can circumvent them or that errors can occur.

Measures that can help to maintain data integrity include:

- Digital signatures.

- Cryptographic hash functions, checksums and message authentication codes.

- Encryption.

- Access controls like identification, authentication, authorization and accountability.

- Intrusion detection systems.

- Employee training.
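To show how one of these measures might look in practice, here is a minimal sketch of a message authentication code using Python’s standard `hmac` module. It assumes that Alice and Bob already share a secret key, and it adds a sequence number to each message, which is our own addition to deal with the replayed “Yes.” from the earlier example. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets

# Assumption: Alice and Bob agreed on this key beforehand over a safe channel.
key = secrets.token_bytes(32)


def tag_message(key: bytes, seq: int, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the sequence number and the message."""
    data = seq.to_bytes(8, "big") + message
    return hmac.new(key, data, hashlib.sha256).digest()


def verify_message(key: bytes, seq: int, message: bytes, tag: bytes) -> bool:
    """Check the tag against the sequence number the receiver expects."""
    expected = tag_message(key, seq, message)
    return hmac.compare_digest(expected, tag)


# Bob sends two replies, each tagged with its position in the conversation.
reply1 = (b"Yes.", tag_message(key, 1, b"Yes."))
reply2 = (b"No.", tag_message(key, 2, b"No."))

# Alice is waiting for reply number 2.
print(verify_message(key, 2, reply2[0], reply2[1]))  # True: the genuine reply
print(verify_message(key, 2, reply1[0], reply1[1]))  # False: reply 1 replayed
print(verify_message(key, 2, b"Yes.", reply2[1]))    # False: content altered
```

Without the key, an attacker can’t produce a valid tag for altered content, and an old message fails verification because its tag covers the wrong sequence number. A MAC only convinces the two key holders, though. It doesn’t prove to a third party who wrote a message, which is where digital signatures come in.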

What is availability?

Finally, we get to the A, availability. Confidentiality and integrity are fairly obvious; we need mechanisms in place to ensure that hackers are kept out of our data, and that they don’t make any unauthorized changes. The importance of availability may be less apparent, but we can make it clearer through an analogy.

An analogy for availability

Let’s say that you don’t trust banks and you want to secure all of your money yourself. You could lock it in a box, go out to the middle of the forest and then bury it 10 feet deep. As long as no one follows you and you don’t leave the ground clearly disturbed, your money should be pretty safe. No one else is likely to find it, so its location is confidential, as is the amount of money that you put in the box. The money should also maintain its integrity if it’s locked in the box all by itself, deep underground.

This strategy for keeping your money safe may be effective, unless you actually want to use it to buy things. Trudging out to the forest, digging down 10 feet and then trudging back isn’t really a practical option every single time you want to buy bread.

While your money may be confidential and maintain its integrity, it isn’t readily available. While this may be an effective technique for pirates burying their treasure until the next time they sail past, it simply isn’t a practical way to store money that we regularly need to use.

The same concept applies to data. If it’s data that we need to use regularly, it also needs to be reliably available. You could protect your information with an encryption algorithm so strong that it would take every computer in the world 10 billion years to decipher. This would guarantee you an extremely comfortable security margin.

But if the encryption algorithm was too heavy duty, it may also take you way too long to decrypt, meaning that your information isn’t readily available when you need it. This is the data equivalent to burying it in the forest—while the confidentiality and integrity may be protected, it’s ultimately an impractical system.

If your security mechanisms make it too hard for authorized personnel to access data, the entire system may be useless. This goes back to the trade-offs and prioritization of security attributes that we mentioned earlier in the article.

The availability attribute

In more technical terms, when we talk about the availability attribute, we mean that we want authorized users to be able to access data in a timely manner, without interruptions, when they need it.

This is critical for being able to carry out tasks—if data isn’t reliably available when it’s required, how could we get anything done? How could anyone trust a company’s service if they never knew whether it would actually be working or not?

To carry on from our example of a heavy-duty encryption algorithm, in order for the data to be considered sufficiently available, we would need enough computing power in order to access the data within a reasonable and consistent time frame. If we didn’t have the necessary computational resources, we would have to reconsider our entire security system to find a way to adequately protect the data while still giving us the availability that we need.

There are a lot of things that can impact availability, including:

- DoS or DDoS attacks — An attacker may launch denial-of-service attacks or distributed denial-of-service attacks to overwhelm a server, meaning that legitimate traffic cannot get through.

- Server failure — Individual servers may go down because of things like a failed disk, or being overloaded.

- Large-scale outages — Entire server farms can go down because of power outages, extreme weather events, and other major disasters.

- Loss, theft or failure of a hard drive — Data may become unavailable if it is only stored on a single drive and there are no available backups.

- Ransomware — Hackers can breach an organization’s systems and lock up its important data, holding it ransom until the company pays them. Often, the hackers don’t even send the company the key for decryption upon receipt of the payment, meaning that the data can become permanently unavailable if it hasn’t been backed up.

- Network issues — If communication is interrupted between various parts of the network, data and systems may become unavailable.

- Software failure — Errors in the code could lead to data becoming unavailable. Attackers may also exploit flaws to make systems and data unavailable.

- Accidental modification or deletion — Files and folders may be accidentally modified or deleted, causing the original version to no longer be available.

- Incorrectly classifying objects — If data or other objects are classified incorrectly, they may not be easy to retrieve when needed, causing them to be unavailable.

How to make data available?

As we have just shown, there are a wide range of problems that can lead to data becoming unavailable, whether it is unintentional or through a deliberate attack.

Because there is such a large variety of things that can go wrong, we have to carefully design our systems with a number of different measures to help ensure that data is available as much as possible.

Unfortunately, we can’t guarantee that data will always be available. Even internet giants with all of the resources in the world like Facebook and Amazon experience downtime every now and then.

In order to have our data reliably available as much as possible, we need to create resilient systems. These should include:

- Infrastructure that has the capacity to handle strains and high demand.

- System design that avoids single points of failure.

- Redundancies for all critical systems. These should be tested regularly.

- Backups for all important data. There should be multiple copies of everything, including at least one stored at a separate site.

- Business continuity plans for crises.

- Access controls like identification, authentication, authorization and accountability.

- Tools for monitoring network traffic and performance.

- Denial-of-service attack protection mechanisms.
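As a small illustration of avoiding a single point of failure, the sketch below tries a list of data sources in order and falls back to the next one when a read fails. The replica functions, the exception name and the returned data are all hypothetical stand-ins. A real system would read from actual servers or storage sites.

```python
from typing import Callable, Sequence


class AllReplicasFailed(Exception):
    """Raised when none of the configured sources could be read."""


def read_with_failover(readers: Sequence[Callable[[], bytes]]) -> bytes:
    """Return data from the first source that responds successfully."""
    failures = 0
    for read in readers:
        try:
            return read()
        except OSError:
            # This source is unavailable, so move on to the next copy.
            failures += 1
    raise AllReplicasFailed(f"{failures} source(s) failed")


def primary() -> bytes:
    raise ConnectionError("primary site is down")  # simulated outage


def secondary() -> bytes:
    return b"customer records"


print(read_with_failover([primary, secondary]))  # b'customer records'
```

The data stays available as long as at least one copy can be reached, which is the reasoning behind redundancies and off-site backups.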

The CIA triad vs other security models

The CIA triad’s attributes of confidentiality, integrity and availability are essential security principles, but they aren’t the only ones that are important to consider in a modern technological environment.

Concepts of security have evolved over the years, and while the CIA triad is a good starting place, if you rely on it too heavily, you may overlook other critical aspects of a security system. Other ways to look at important security attributes include:

The Parkerian hexad

Another common security model is the Parkerian hexad (like a triad, but with six elements instead of three), first proposed by Donn Parker in 1998. The Parkerian hexad includes:

- Confidentiality — The Parkerian conception of confidentiality is much the same as that of the CIA model.

- Possession/Control — This refers to protecting data from falling under the control of an unauthorized entity. One example where this is important is in copyrighted material. This type of data doesn’t really need to be confidential, but there do need to be mechanisms in place to prevent unauthorized access.

- Integrity — The Parkerian view of integrity is similar to the one discussed earlier in this article.

- Authenticity — If the security attribute of integrity focuses on the content of information maintaining its correctness (many would argue that integrity is not limited in this way), Parker added the attribute of authenticity as an assurance that we know the source of data. In essence, if data is authentic, it was created by the person who claims to have created it, and not by an attacker or some other impostor.

- Availability — Availability is also essentially the same in the Parkerian hexad as it is in the CIA model.

- Utility — In the Parkerian hexad, utility is concerned with the usefulness of data. One example to help get Parker’s perspective across is to ask, “Is the data in a format that can be used?” Imagine a scenario where there is encrypted data in storage and an employee has the key to access it. This could tick the boxes for each of the five other security attributes. But what if the storage device was a magnetic tape, and the employee didn’t have the necessary device or skill set to get it off the tape? We could say that the data was technically available, but not in a useful format to the employee. Situations like these are why Parker added the additional attribute of utility. However, there is also a valid argument to simply keep usefulness under the umbrella of availability, rather than give it its own separate category.

ISO/IEC 27001:2013

The International Organization for Standardization (ISO, which is not technically an acronym) document ISO/IEC 27001:2013 has yet another way of looking at things. It defines information security as the:

“…preservation of confidentiality, integrity and availability of information; in addition, other properties such as authenticity, accountability, non-repudiation and reliability can also be involved”

While it mostly sticks to the CIA triad, it does indicate that authenticity, accountability and non-repudiation can be important aspects. However, they clearly take second billing to the CIA attributes in this definition.

The ISO is the leading global standards body, and this particular publication is one of the most important documents for information security management systems. The point of these details isn’t to bore you with the drudgery of international standards bodies, but simply to highlight that the different ways of looking at security models and security attributes go all the way to the top.

The (ISC)2 CISSP: Certified Information Systems Security Professional Official Study Guide

The (ISC)2 CISSP: Certified Information Systems Security Professional Official Study Guide is a book designed to help people pass one of the more respected qualifications in the field of cybersecurity. Its take on security attributes is slightly different once again.

It includes security principles like non-repudiation, which refers to the inability to deny. We’ll expand on this in greater detail later. Other principles included under the umbrella of integrity include authenticity and accountability. Although the guide adds these principles in, they do not have the same level of prominence as the CIA triad.

The DIE Triad

The DIE — Distributed, Immutable, Ephemeral — Triad is the most recent attempt to update the CIA Triad. It was created in response to the increasing reliance on cloud technology involving vast amounts of data.

Its proponents argue that the concerns behind the CIA Triad will largely be negated if systems are designed to be:

- Distributed — This prevents over-reliance on a single system

- Immutable — This makes assets impossible to change

- Ephemeral — This involves designing assets to have a short and defined lifespan

Which model should you follow?

Each of these models examines security from a slightly different perspective, and they may categorize certain aspects of it in ways that disagree with or conflict with another model. However, when you dig deep into the pages of each of the models we just highlighted, they all cover much the same information in one way or another. It’s often just categorized or grouped in a different way.

There may not necessarily be a correct way of looking at security, but it’s important that you are aware of each of these properties and that any security system you build takes them into account.

If you implement security mechanisms that verify data integrity, but overlook an important concept like authenticity, you may leave open a window that allows hackers to breach or undermine your system. As long as you consider all of the security attributes, it’s less important whether you categorize them in precisely the same way as one security model or another.

The differences between these major security models may just be issues of semantics and taxonomy, and it’s best not to get caught up bickering over where exactly the line is.

You could view these arguments like two zoologists bickering over whether the American herring gull is its own species, or simply a subspecies of the herring gull. Meanwhile, they are completely oblivious to the grizzly bear that stands right behind them, licking its lips.

As long as each security attribute is addressed somewhere in your system, it’s best to focus on protecting against the real dangers, rather than worrying about such petty squabbles.

Whether or not you include the following concepts somewhere within the CIA triad, as the CISSP documentation does, it’s much more important to make sure that they are addressed in your security model. The real danger comes from leaving them out, not from a slight semantic or taxonomic disagreement.

Other important security attributes

In our discussion of various security models, we just mentioned a number of different security attributes that we haven’t covered yet. Now it’s time to discuss the two most important ones, non-repudiation and authenticity. While they may not have made it into the CIA acronym, overlooking them can lead to your security system being easily compromised.

Non-repudiation

Non-repudiation is one of the most critical security attributes that we haven’t discussed yet. But before we can explain what non-repudiation is in the cybersecurity context, we will make sure you have a clear idea of what repudiation is.

What is repudiation?

Let’s say you catch a criminal stealing candy from a baby. You see them with your own eyes, but no one else is around you and there is no other evidence. You summon a police officer and you tell her that you saw the thief stealing candy from the baby.

The police officer then goes to the thief to get their side of the story. The police officer confronts the thief with your claim:

“This witness says that you just stole candy from a baby.”

What does the thief say in response? It’s a master legal defense that even the slickest lawyer would think is a stroke of sheer brilliance. They utter:

“No I didn’t.”

With three simple words, the thief has created an impossible mountain for you and the police officer to climb. With no other evidence, it’s simply your word against theirs, and reasonable doubt would weigh heavily on the case. The thief would get off.

So what is this master legal defense? In lawyer-speak, it’s called repudiation, and it basically means to refuse to accept the truth of something. The thief has denied the truth of your statement. They have repudiated your claim that they stole candy from a baby.

When it comes to cybersecurity, repudiation can also be problematic. What if you had some top secret plans in your organization, and due to an administrative error, a normal user account was able to gain access to the documents, even though they shouldn’t have been able to?

When you bring the employee into the office to reprimand them about it, you don’t want them to be able to repudiate their actions and just say “No I didn’t” when you confront them about accessing the documents.

In the information security world, non-repudiation involves having a system in place that basically prevents people from saying “No I didn’t” when they did in fact do the specified thing. In more technical terms, non-repudiation is a security attribute that means an entity cannot deny having been involved in or responsible for an activity or an action.

If a system has the property of non-repudiation, an entity can’t falsely claim that:

- They didn’t actually change or delete data that they really did modify.

- They didn’t send a certain message that they actually did send.

- They didn’t commit a range of other acts that they actually did commit.

Measures for implementing non-repudiation into a system

The security attribute of non-repudiation can be brought into a system through the following five separate processes:

- Identification — When entering a secure system, an entity must claim an identity. In digital systems, this is often done through usernames.

- Authentication — The authentication process involves an entity proving that they are who they claim to be. To prove that you are truly the owner of a given username, you often have to enter secret information that only you should know, such as a password or the answers to security questions. Authentication can also include additional factors, such as proving that you possess something that belongs to the user, like a security token or an authentication app.

- Authorization — Once a user has authenticated themselves, the system must determine which resources that user is permitted to access. It must deny access to any resources that the user is unauthorized to access, and only grant access to resources that the user is authorized to access.

- Auditing — Keeping logs of the activities within the system. This is critical for non-repudiation. The identification and authentication processes have given the system a way to identify who is performing each action. The system simply needs to keep records of every user’s actions so that a user cannot repudiate anything that they do.

- Accountability — Regularly reviewing the logs to detect violations and to make sure that compliance requirements are met. This allows users to be held responsible for any wrongdoings.

The above five processes help to provide non-repudiation to a system. A user cannot simply claim “No I didn’t”, when the system has the records that show what the user really did.
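To make the five processes a little more concrete, here is a minimal Python sketch of how they might fit together. Everything in it is an assumption made for illustration: the in-memory USERS, PERMISSIONS and AUDIT_LOG stores, and the register, authenticate, access and review_denied functions are all hypothetical names. A real system would use a proper user database and protected, append-only log storage.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone

# Hypothetical in-memory stores, used only for illustration.
USERS = {}        # username -> (salt, password hash)
PERMISSIONS = {}  # username -> set of resources the user may access
AUDIT_LOG = []    # one record per access attempt


def _hash_password(password: str, salt: bytes) -> bytes:
    # Derive a password hash so the plain password is never stored.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def register(username: str, password: str, resources) -> None:
    salt = os.urandom(16)
    USERS[username] = (salt, _hash_password(password, salt))
    PERMISSIONS[username] = set(resources)


def authenticate(username: str, password: str) -> bool:
    # Identification: the username is the identity being claimed.
    if username not in USERS:
        return False
    # Authentication: the password is the proof of that claim.
    salt, stored_hash = USERS[username]
    return hmac.compare_digest(stored_hash, _hash_password(password, salt))


def access(username: str, password: str, resource: str) -> bool:
    authenticated = authenticate(username, password)
    # Authorization: only grant resources this user is permitted to access.
    allowed = authenticated and resource in PERMISSIONS.get(username, set())
    # Auditing: record every attempt, whether it succeeded or not.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": username,
        "resource": resource,
        "authenticated": authenticated,
        "allowed": allowed,
    })
    return allowed


def review_denied(log):
    # Accountability: review the log to find attempts that were refused.
    return [entry for entry in log if not entry["allowed"]]
```

If a user registered with access to a single document later tried to open a different one, the access function would refuse the request and still write a record of the attempt to the log. That record is what makes a later “No I didn’t” hard to sustain.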

Note that authentication is a process, while authenticity, which we will discuss in the next section, is a security attribute.

Authenticity

As a security property, authenticity is concerned with whether an entity is truly who it claims to be, and thus whether their actions and data can be attributed to them or not. Data is deemed authentic if it can be verified that it originated from the entity claiming to be responsible for it.

As we mentioned earlier, the (ISC)² CISSP: Certified Information Systems Security Professional Official Study Guide categorizes authenticity within the realm of integrity. It’s certainly possible to look at authenticity as a subset of integrity.

When we discussed integrity earlier, we were mainly concerned with the integrity of the data itself. However, we could also consider the integrity of the data’s authorship. It really depends on how you want to split hairs, but it wouldn’t be unreasonable to suggest that a given message would lack integrity if it was created by someone other than the party that was claimed.

Measures for proving authenticity

Regardless of the semantics, it’s important that we are able to verify who created, altered, or is responsible for certain data and actions within a system. There are a number of ways that we can achieve this. More broadly, we can use the processes of identification, authentication, authorization, auditing, and accountability that we discussed in the prior section.

Individual mechanisms that can be involved in proving authenticity include:

- Digital signatures.

- Message authentication codes.

- Usernames and passwords.

- Security questions.

- Security tokens and authentication apps.

- Biometric identifiers.
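As a rough illustration of one mechanism from the list above, the following Python sketch uses a message authentication code (HMAC-SHA256 from the standard library) to check that a message came from someone who holds a shared secret key. The make_tag and verify_tag helper names, the key and the sample message are all assumptions made for this example, not part of any particular system.

```python
import hashlib
import hmac
import secrets


def make_tag(key: bytes, message: bytes) -> bytes:
    # Compute an HMAC-SHA256 tag over the message with the shared key.
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    # Recompute the tag and compare in constant time.
    return hmac.compare_digest(make_tag(key, message), tag)


# Hypothetical shared key and message, for illustration only.
key = secrets.token_bytes(32)
message = b"Transfer 100 units to account 42"
tag = make_tag(key, message)

print(verify_tag(key, message, tag))              # genuine message
print(verify_tag(key, b"Transfer 900 units", tag))  # altered message
```

The first check should succeed and the second should fail, because the tag only matches the exact message it was computed over. Note that a message authentication code only shows that the message came from someone holding the shared key. Because both parties hold that key, a MAC on its own does not stop one of them from denying authorship. Digital signatures, where only the signer holds the private key, are the usual choice when non-repudiation is also required.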

The CIA triad: Are confidentiality, integrity and availability enough to keep your systems secure?

Confidentiality, integrity and availability are often viewed as the primary security attributes because of the critical roles that they play in a system’s overall security. However, it’s important to keep in mind just how broad, complex, and interwoven each of these attributes really is.

If you stick to a narrow definition of each of them, or neglect to see how they interact and overlap, your conception of a security model will be more limited, which in turn can endanger any systems that you attempt to secure.

The best approach is to understand that the CIA triad is a simple shorthand for three important yet complicated properties that are required for secure systems. There are many more properties that can be important for certain systems, including some that we haven’t even been able to mention.

Beneath all of these high level properties and security goals, there are the many individual security controls, mechanisms, processes and policies that all work together to make up a secure system.

To put it as briefly as possible, security is complex. That’s what makes it so hard to get it right.