Few factors are as important when measuring network performance as speed. The speed at which packets travel from sender to recipient determines how much information can be sent within a given time frame. When network speed is low, applications move at a snail’s pace.

In brief, throughput is a term used for how much data can be transferred from the source to its destination within a given time frame, while bandwidth is the term used for the maximum transfer capacity of a network.

We’re going to look at these two concepts in further detail below.

Here is our list of the best tools for throughput and bandwidth analysis:

- Paessler PRTG Network Monitor EDITOR’S CHOICE A collection of sensors that includes a monitor for traffic activity, showing throughput, bandwidth, and latency. Runs on Windows Server.

- Site24x7 Network Traffic Monitoring (FREE TRIAL) This cloud-based network traffic monitor uses flow protocols to extract traffic data from switches and routers and provides capacity planning utilities.

- SolarWinds Network Bandwidth Analyzer Pack This package contains two tools that monitor the statuses and performance of network devices and track network bandwidth utilization. Runs on Windows Server.

What is Throughput?

Throughput is the name given to the amount of data that can be sent and received within a specific timeframe.

In other words, throughput measures the rate at which messages arrive at their destination successfully. It is a practical measure of actual packet delivery rather than theoretical packet delivery. Average data throughput tells the user how many packets are arriving at their destination.

Related post: How to measure your network throughput

For a service to perform well, packets need to reach their destination successfully. If lots of packets are lost in transit, the performance of the network will be poor. Monitoring network throughput is crucial for organizations that want to track the real-time performance of their network and confirm that packets are being delivered successfully.

Network throughput is usually measured in bits per second (bps), but it is sometimes measured in data packets per second. It is reported as an average figure that represents the overall performance of the network. Low throughput points to problems such as packet loss, where packets are lost in transit. Packet loss can be devastating to VoIP calls, where it causes the audio to skip.
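As a rough illustration of the measurement described above, average throughput is simply the volume of data delivered divided by the time it took to arrive. The function name and the sample figures below are assumptions made for the sake of the example, not output from any particular monitoring tool.

```python
def average_throughput_bps(bytes_delivered: int, seconds: float) -> float:
    """Average throughput in bits per second over a measurement window."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # Counters usually report bytes, while throughput is quoted in bits.
    return bytes_delivered * 8 / seconds


# Hypothetical sample: 75,000,000 bytes delivered in 10 seconds.
rate = average_throughput_bps(75_000_000, 10)
print(f"{rate / 1_000_000:.1f} Mbps")
```

Dividing the same byte count by the average packet size would give a packets-per-second figure instead.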

What is Bandwidth?

Bandwidth is a measure of how much data can be sent and received at a time. The higher the bandwidth a network has, the more data it can send back and forth. The term bandwidth isn’t used to measure speed but rather to measure capacity.

Bandwidth can be measured in bits per second (bps), megabits per second (Mbps), and gigabits per second (Gbps).

The key thing to remember about bandwidth is that having a high bandwidth doesn’t guarantee high network performance. If throughput in the network is being affected by network latency, packet loss, and jitter then your service will see delays even if you have a substantial amount of bandwidth available.

For more information, we have a guide that looks at the difference between throughput and latency.

Bandwidth vs Throughput: Theoretical Packet Delivery and Actual Packet Delivery

On the surface, bandwidth and throughput appear to be similar, but in practice they measure different things. The most common analogy used to describe the relationship between the two is to consider bandwidth as a pipe and throughput as water. The larger the pipe or bandwidth is, the more water or data can flow through it at one time.

Within a network, this means that the amount of bandwidth determines how many packets can be sent and received between devices at one time and the amount of throughput tells you how many packets are actually getting transmitted.

To put it another way, bandwidth provides you with a theoretical measure of the maximum number of packets that can be transferred and throughput tells you the number of packets that are actually being successfully transferred. As a result, throughput is more important than bandwidth as a measure of network performance.

Although throughput is the better measure of network performance, this doesn’t mean that bandwidth has no influence on performance. For instance, bandwidth has a significant influence on how fast a web page will load in a browser. So, if you were looking to use web hosting for an application, the amount of bandwidth you have available would impact the performance of certain services.

Bandwidth and Speed Aren’t the Same Thing

A common misconception is that bandwidth can be used as a measure of speed. We have discussed this briefly above but it is worth revisiting because of how commonly the two are mixed up. For instance, you’ll often see Internet Service Providers advertising high-speed services on the strength of the maximum bandwidth available to you.

This makes for good marketing but it isn’t correct. If you increase the amount of bandwidth the only thing that changes is that more data can be sent at one time. Being able to send more data at once appears to make the network faster but it doesn’t change the actual speed at which the packets are traveling.

The truth is bandwidth is just one of a multitude of factors that tie into the speed of a network. Within a network, speed is a measure of response time. Factors like packet loss and latency impact speed.

Network Bandwidth and Network Latency

Bandwidth and latency are also discussed together regularly but each has its own unique meaning. We’ve already established that bandwidth is the capacity of the network or how much data can be transferred in a window of time. Latency is simply the amount of time that it takes for data to travel from a sender to its destination.

The relationship between the two is close, as bandwidth determines how much data can theoretically be sent and received at one time. However, latency determines how fast these packets actually reach their destination. Minimizing latency is important for keeping the network moving as fast as possible.
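To make the distinction concrete, here is a minimal sketch that uses a deliberately simplified model: the time to deliver a piece of data is the one-way latency plus the time needed to put its bits on the wire. The function name and the figures are illustrative assumptions, and real networks add queuing and protocol effects that this model ignores.

```python
def delivery_time_s(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """Simplified model: one-way latency plus time to put the bits on the wire."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    return latency_s + size_bytes * 8 / bandwidth_bps


# Hypothetical figures: a 1,500-byte packet on a path with 50 ms one-way latency.
for bandwidth_bps in (100e6, 1e9):
    t = delivery_time_s(1_500, bandwidth_bps, 0.050)
    print(f"{bandwidth_bps / 1e6:.0f} Mbps link: {t * 1000:.3f} ms")
```

In this model, a tenfold increase in bandwidth barely changes the delivery time of a single packet because latency dominates. The extra bandwidth only pays off when a lot of data has to be moved at once.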

Monitoring Network Performance with Throughput (Including Latency and Packet Loss)

If you want to measure network performance, it makes more sense to use network throughput than to look at capacity with bandwidth. Network administrators have a number of ways to detect poor performance within an enterprise-grade network.

Using throughput to measure network performance is useful when troubleshooting because it helps administrators to pinpoint the root cause of a slow network. However, it is just one of the three factors that determine network performance. The other two are latency and packet loss:

- Latency – The term used for the amount of time taken for a packet to be transmitted from the source to its destination. Latency can be measured in a number of ways, such as round-trip time or one-way transfer time.

- Packet loss – A term used to specify the number of packets lost in transit during a network transfer.

Measuring these three together provides administrators with a much more complete perspective of the network’s performance.
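As a small sketch of how latency and packet loss can be summarized side by side, the function below takes one result per probe packet: a round-trip time in milliseconds, or None if the probe was lost. The function name, the input format, and the sample values are assumptions made for illustration.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize probe results: one round-trip time per probe, None if lost."""
    if not rtts_ms:
        raise ValueError("at least one probe result is required")
    received = [r for r in rtts_ms if r is not None]
    lost = len(rtts_ms) - len(received)
    return {
        "sent": len(rtts_ms),
        "lost": lost,
        "loss_pct": 100 * lost / len(rtts_ms),
        # The average is undefined when every probe was lost.
        "avg_rtt_ms": mean(received) if received else None,
    }


# Hypothetical sample: five probes, two of which never came back.
print(summarize_probes([20.0, 22.0, None, 21.0, None]))
```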

See Also: How to Fix Packet Loss

Our methodology for selecting tools to measure throughput and bandwidth

We reviewed the market for bandwidth monitoring software and analyzed the options based on the following criteria:

- The ability to communicate with network devices through packet flow protocols, such as NetFlow and sFlow

- A detection facility to identify total bandwidth capacity

- A link-by-link and path analysis service

- A protocol analyzer for application traffic analysis

- Throughput displays both live and over time

- A free trial for assessment

- A comprehensive package of tools that is worth the asking price

Tools for Monitoring Network Bandwidth

Even though network bandwidth isn’t a measure of speed, monitoring bandwidth availability is important for making sure that your theoretical bandwidth is available in practice when you need it. Conducting bandwidth monitoring with a network monitoring tool allows you to see the actual amount of bandwidth available to your connected devices within the network.

1. Paessler PRTG Network Monitor (FREE TRIAL)

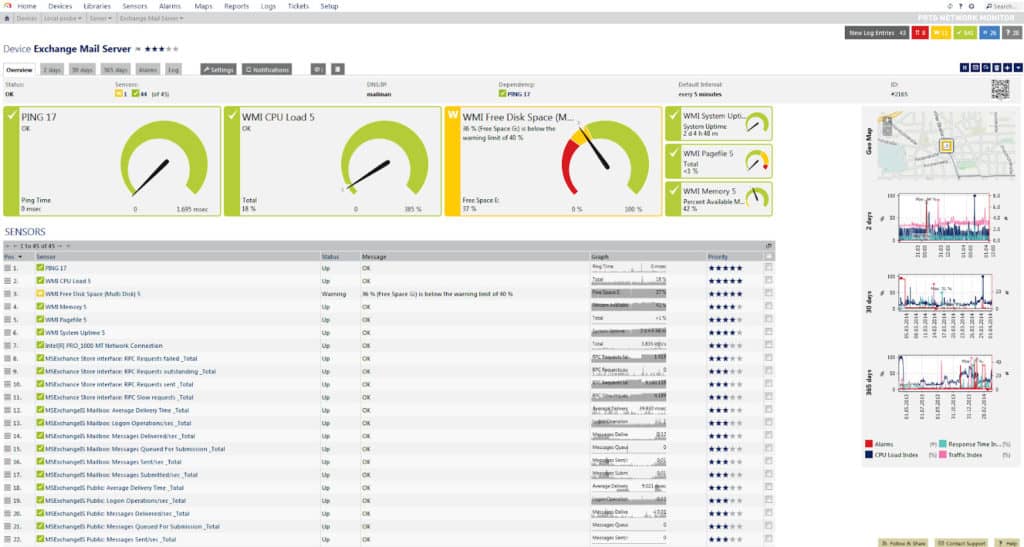

Paessler PRTG Network Monitor is a great tool for monitoring bandwidth usage. PRTG Network Monitor allows you to autodiscover devices within your network and monitor their traffic usage. With PRTG Network Monitor you can use SNMP, NetFlow, and WMI to monitor traffic and keep tabs on bandwidth availability.

Key Features:

- Autodiscovery and inventory of devices

- Throughput data from SNMP

- QoS sensor

Why do we recommend it?

Paessler PRTG is a bundle of more than a thousand monitors that includes network testing systems such as Ping and TraceRoute. A series of sensors that implement packet sampling and traffic performance testing, such as NetFlow, provide insights into packet flows around the network.

PRTG Network Monitor also helps you to deal with bandwidth hogs by showing how much bandwidth is being consumed by individual devices and applications. This helps you to keep the network optimized for every device and to make sure that a few services aren’t slowing your connectivity down to a crawl.

Who is it recommended for?

Paessler PRTG is a very comprehensive package but you can spend less money by only accessing the sensors that you need instead of all of them. This makes the system accessible to businesses of all sizes. A free allowance of 100 sensors is appealing to small business owners.

Pros:

- Drag and drop editor makes it easy to build custom views and reports

- Offers templates for monitoring metrics like bandwidth, throughput, and latency

- Supports a wide range of alert mediums such as SMS, email, and third-party integrations into platforms like Slack/Jira

- Supports a freeware version (up to 100 sensors)

Cons:

- PRTG is a very comprehensive platform with many features and moving parts that require time to learn

Paessler offers PRTG free of charge for up to 100 sensors. You can access a 30-day free trial to figure out what your network requirements are.

EDITOR'S CHOICE

Paessler PRTG Network Monitor is our top pick for a bandwidth monitoring tool because it offers a comprehensive, easy-to-use solution for monitoring network traffic and ensuring optimal performance across all network devices. Its attractive interface features charts and dials and can be customized. PRTG enables network administrators to quickly set up and begin monitoring their entire network infrastructure, including bandwidth usage, network health, and traffic patterns, without a steep learning curve.

One of the most important features of PRTG is its ability to monitor bandwidth usage in real-time and provide detailed insights into network performance. The tool offers a wide range of sensors, including SNMP, NetFlow, sFlow, and IPFIX, to capture traffic data from routers, switches, and other devices. This flexibility allows for a thorough analysis of traffic and bandwidth consumption at various points within the network.

PRTG makes it easy to track bandwidth utilization and identify potential bottlenecks or areas of concern. The tool provides real-time alerts for performance issues, enabling IT teams to take immediate action before network slowdowns or failures occur.

Additionally, Paessler offers scalability, allowing the tool to grow alongside your network needs whether you’re monitoring a small office network or a large, complex enterprise environment. This is a flexible system where the buyer chooses which sensors to activate. Those who only use 100 sensors never have to pay for the system.

Download: Get a 30-day free trial

Official Site: https://www.paessler.com/bandwidth-monitoring-tool

OS: Windows Server or SaaS

2. Site24x7 Network Traffic Monitoring (FREE TRIAL)

Site24x7 Network Traffic Monitoring is the best option available for companies that want to use SaaS packages for their system monitoring services. This tool installs an agent on the network to gather data and works with flow protocols to identify traffic anomalies per link.

Key Features:

- Extracts data from switches

- Records link capacity

- Identifies capacity utilization

Why do we recommend it?

Site24x7 Network Traffic Monitoring sets itself up through a network discovery routine and then queries all discovered network devices for traffic data. Throughput information is compared to full capacity per interface so the tool can spot when one link is nearing its full capacity. This raises an alert, notifying technicians so they can take action and head off disaster.
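The general idea of comparing throughput to capacity per interface can be sketched in a few lines. This is not Site24x7’s implementation. The function name, the 80 percent threshold, and the link names are hypothetical and only show the kind of check involved.

```python
from typing import Dict, List, Tuple


def links_near_capacity(
    links: Dict[str, Tuple[float, float]], threshold_pct: float = 80.0
) -> List[Tuple[str, float]]:
    """Return (link, utilization %) for links at or above the threshold.

    links maps a link name to (current throughput in bps, capacity in bps).
    """
    flagged = []
    for name, (throughput_bps, capacity_bps) in links.items():
        utilization = 100 * throughput_bps / capacity_bps
        if utilization >= threshold_pct:
            flagged.append((name, utilization))
    return flagged


# Hypothetical readings for three interfaces.
sample = {
    "core-sw1:Gi0/1": (920e6, 1e9),
    "core-sw1:Gi0/2": (310e6, 1e9),
    "edge-rtr:WAN": (85e6, 100e6),
}
for name, pct in links_near_capacity(sample):
    print(f"ALERT {name} at {pct:.0f}% of capacity")
```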

The Site24x7 system communicates with switches and routers using flow protocols. As different manufacturers load their devices with different protocols, the Network Traffic Monitoring system needs all of them in order to collect data from all devices no matter the brand. The tool can use the NetFlow, IPFIX, sFlow, J-Flow, cFlow, AppFlow, and NetStream protocols. Data is uploaded to the server for assessment against alert thresholds and it is also stored for capacity planning analysis.

Who is it recommended for?

This package is sold in plans that also include network device status tracking and server and application monitoring. Base plans are sized and priced to suit small businesses and larger businesses pay extra for capacity expansions. Therefore, the package is suitable for any size or type of business and there is also a plan for managed service providers.

Pros:

- Automatic network scanning to create a network inventory

- System monitoring automation with performance thresholds and alerts

- Flow protocols that extract traffic throughput data from switches and routers

- Stores statistics for historical analysis

- Included in plans that provide full-stack observability

Cons:

- No on-premises version

Site24x7 is a cloud-based system that has many monitoring modules that cover different technologies. The Network Traffic Monitoring service is just one of the units on the platform that also offers network device configuration management and log collection. Examine the Site24x7 platform with a 30-day free trial.

Also, the free SolarWinds Flow Tool Bundle is a useful addition to your analysis armory. The pack includes an interface that helps you to configure your Cisco routers to send NetFlow data to your collector. Two more utilities enable you to circulate traffic data around the network for testing purposes and also generate traffic to examine the performance of your equipment and network services in the face of extra demand.

Tools for Monitoring Throughput and Performance

3. SolarWinds Network Bandwidth Analyzer Pack

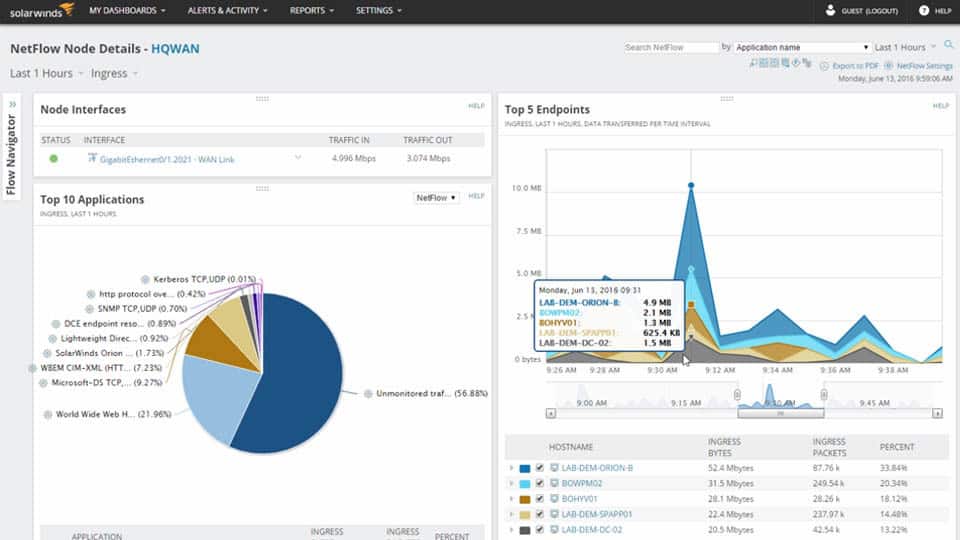

SolarWinds Network Bandwidth Analyzer Pack is a product that can accurately measure the throughput of your network.

Key Features:

- Device discovery and logging

- Tracks the statuses of network devices

- Collects traffic data

- Alerts for performance problems

- Includes the NetFlow Traffic Analyzer

Why do we recommend it?

The SolarWinds Network Bandwidth Analyzer Pack is a package of monitoring tools that gives you a network device monitor and a traffic analyzer. The traffic monitoring services in this bundle identify the full capacity of all parts of the network and also show the current data traffic volumes on each device and link. A path visualization service, called NetPath, lets you see traffic on all of the links from one point on the network to another.

You can view throughput flow data alongside bandwidth monitoring with SNMP. There is also a network throughput test that can be combined with pre- and post-QoS policy maps to show whether your QoS policy is improving the performance of the network over time.
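SNMP-based monitors generally derive throughput from interface octet counters (for example, ifInOctets in the standard IF-MIB) by polling twice and dividing the difference by the polling interval. The sketch below shows that calculation under stated assumptions: it is a generic illustration rather than SolarWinds’ code, the function name is made up, and the counter is assumed to wrap at most once between polls.

```python
def rate_from_counters(
    prev_octets: int, curr_octets: int, interval_s: float, counter_bits: int = 32
) -> float:
    """Throughput in bps from two readings of an SNMP octet counter.

    Assumes the counter wrapped at most once between the two polls.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    modulus = 2 ** counter_bits
    # The modulo handles a counter that rolled over back past zero.
    delta_octets = (curr_octets - prev_octets) % modulus
    return delta_octets * 8 / interval_s


# Hypothetical readings taken 10 seconds apart, with a 32-bit wrap in between.
print(rate_from_counters(4_294_967_000, 704, 10))
```

On fast links, a 32-bit counter can wrap more than once between polls, which is why 64-bit counters such as ifHCInOctets are usually preferred where the device supports them.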

Who is it recommended for?

This is a big package and it is suitable for the managers of large networks. Although the tools are useful for networks of all sizes, the complexities of all of the paths that are built into large LANs need a high-end tool like this.

Pros:

- Designed to help administrators measure and optimize their throughput

- Highly customizable reports, dashboards, and monitoring tools

- Uses simple QoS rules for quick traffic shaping

- Built with large networks in mind, can scale to 50,000 flows

- Runs on Windows Server

Cons:

- Is a highly specialized suite of tools designed for network professionals, not designed for non-technical users

See also: What is QoS

How to Optimize Network Bandwidth

Though bandwidth isn’t the same thing as speed, having poorly-optimized network bandwidth can have a negative impact on the performance of the network and produce a subpar user experience on many applications. In this section we’re going to look at how you can make sure that your bandwidth is optimized:

- Use QoS Settings

- Use Cloud-based Applications

- Eliminate Non-Essential Traffic

- Do Backups and Updates Outside of Peak Hours

Use QoS Settings

Organizations commonly implement QoS or Quality of Service settings to help the network to support mission-critical applications. With QoS settings, you can set network traffic policies to prioritize certain types of traffic so that high-maintenance applications have all the bandwidth they need to perform well.

For example, if you were running a VoIP phone system you could set QoS settings to prioritize voice traffic. By prioritizing voice traffic you would make sure that end users have the best user experience possible as voice packets would take priority over less important forms of traffic.
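In practice, QoS is configured on routers and switches rather than written as application code, but the underlying idea of strict priority queuing can be sketched briefly. The traffic classes, their priority values, and the packet labels below are hypothetical.

```python
import heapq
from itertools import count

# Hypothetical traffic classes: a lower number is sent first.
PRIORITY = {"voice": 0, "video": 1, "bulk": 2}


def drain_in_priority_order(packets):
    """Return packet labels in the order a strict-priority queue would send them.

    packets is an iterable of (traffic_class, label) in arrival order.
    """
    arrival = count()  # tie-breaker that keeps arrival order within a class
    heap = []
    for traffic_class, label in packets:
        heapq.heappush(heap, (PRIORITY[traffic_class], next(arrival), label))
    order = []
    while heap:
        _, _, label = heapq.heappop(heap)
        order.append(label)
    return order


queued = [("bulk", "backup-1"), ("voice", "call-1"), ("video", "meeting-1"), ("voice", "call-2")]
print(drain_in_priority_order(queued))
```

Voice packets leave the queue first even though the backup packet arrived before them, which is the behavior the VoIP example describes.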

Use Cloud-based Applications

Sometimes the easiest way to improve network optimization is to deploy applications in the cloud. By using public and private clouds you offload the pressure of maintaining that traffic within your own network. By letting a third-party company deal with the performance of these applications you reduce your monitoring burdens and can also increase the performance of your regularly-used applications.

Eliminate Non-Essential Traffic

Even in the most productive environments, it can be surprising how much non-essential traffic crops up on networks. It is not uncommon to find employees browsing on YouTube or streaming films on Netflix! Tightening up your internal policies to block this traffic can help to make sure that bandwidth isn’t being wasted on applications irrelevant to daily operations.

Do Backups and Updates Outside of Peak Hours

There are many instances where organizations put a strain on bandwidth by running massive network backups and updates in the middle of the day. These backups and software updates can have a substantial impact on the bandwidth availability of the network. This results in higher latency and poor performance for users.

Scheduling backups and software patches outside of working hours or peak hours will help to minimize the impact that these changes will have on the network. Doing these necessary changes outside of normal working hours will make sure that the network is up and running for everyone.
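A scheduling script can guard a heavy job with a simple time-window check. The sketch below assumes a peak window of 08:00 to 18:00. The function name and the default hours are illustrative and should be adjusted to your own traffic patterns.

```python
from datetime import time


def is_off_peak(now: time, peak_start: time = time(8, 0), peak_end: time = time(18, 0)) -> bool:
    """True when `now` falls outside the peak window [peak_start, peak_end)."""
    return not (peak_start <= now < peak_end)


# Hypothetical check before launching a backup job.
for candidate in (time(2, 30), time(12, 0)):
    verdict = "run backup" if is_off_peak(candidate) else "wait"
    print(candidate.strftime("%H:%M"), verdict)
```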

See also: Best Free Bandwidth Monitoring Software and Tools

How to Optimize Network Throughput

Just like network bandwidth, data throughput can also be optimized. The key to optimizing your network throughput is to minimize latency. The more latency there is, the lower the throughput, and low throughput delivers poor performance for end-users. There are many different ways to minimize latency and we’re going to look at some of the simplest ways here.
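One well-known way to see why latency caps throughput is the window limit on a single TCP connection: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput cannot exceed the window size divided by the round-trip time. The sketch below applies that bound. The function name and the sample values are assumptions, and it ignores packet loss and congestion control.

```python
def max_tcp_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound on one TCP connection's throughput: one window per round trip."""
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return window_bytes * 8 / rtt_s


# Hypothetical case: a 65,535-byte window (no window scaling) at two round-trip times.
for rtt_ms in (10, 100):
    bound = max_tcp_throughput_bps(65_535, rtt_ms / 1000)
    print(f"RTT {rtt_ms} ms: at most {bound / 1e6:.2f} Mbps")
```

With the same window, a tenfold increase in round-trip time cuts the achievable throughput to a tenth, however much bandwidth the link has.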

Monitor Endpoint Usage

The most common cause of latency is other users on the network. If employees are using traffic-intensive tools or applications then the performance of the network can slow dramatically, particularly if the individual is conducting downloads. Monitoring endpoint usage can allow you to find when employees are causing latency with applications that may or may not be related to work.

Using a network monitoring tool like Paessler PRTG Network Monitor or SolarWinds Network Performance Monitor can show you which devices are eating up the available bandwidth. Once you’ve identified them, you can take action to limit or block the traffic that is causing the problem.
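Monitoring tools typically present this as a "top talkers" view. As a minimal sketch of the idea, the function below totals bytes per source address from a list of flow records. The record format, the function name, and the addresses are hypothetical and do not reflect either product’s data model.

```python
from collections import Counter


def top_talkers(flows, n=3):
    """Return the n source addresses that sent the most bytes.

    flows is an iterable of (source_ip, byte_count) records.
    """
    totals = Counter()
    for src_ip, byte_count in flows:
        totals[src_ip] += byte_count
    return totals.most_common(n)


# Hypothetical flow records exported over a short interval.
records = [
    ("10.0.0.5", 40_000_000),
    ("10.0.0.9", 2_500_000),
    ("10.0.0.5", 35_000_000),
    ("10.0.0.7", 12_000_000),
]
print(top_talkers(records, n=2))
```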

Find and Address Network Bottlenecks

Network bottlenecks are one of the main culprits behind networks with high latency. Network bottlenecks are where traffic becomes congested and slows the performance of the network. These bottlenecks can occur throughout the day depending on when traffic is most congested. In larger organizations, this tends to be after lunch when employees return to work, but it can be anytime the network is operational.

Network bottlenecks can be addressed in a number of ways, starting with upgrading a router or switch to keep up with traffic levels. Another way is to reduce the number of nodes that packets have to pass through, which shortens the path that packets travel and reduces congestion.

See also: Best Tools To Monitor Throughput

Throughput vs Bandwidth: Closing Words

Throughput and bandwidth are two different concepts but they still have a close relationship with each other. Paying attention to both will help to make sure that your network delivers the best performance possible. Remember that bandwidth is the pipe of theoretical transfer capacity whereas throughput is the water that tells you the rate of successful packet delivery.

Monitoring bandwidth usage and throughput together will provide you with the most comprehensive view of your network performance. Combining the two can allow you to make sure that your allocated bandwidth is being used optimally and allows you to confront performance issues like packet loss head on.

Throughput vs Bandwidth & Related FAQs

What is the difference between delay and latency?

Delay and latency are very similar terms and almost interchangeable. A delay refers to what prevents a packet from arriving quickly, so it refers to a slowdown in front of the packet and is measured as the time it takes for the first bit of the packet to get to the destination. Latency refers to the time it takes for the whole packet to get to the destination.

What is the difference between throughput and goodput?

Throughput refers to all of the traffic that crosses a network. Goodput only measures the packets that actually carry data. For example, a TCP connection requires session establishment exchanges and TLS-protected transfers require even more preamble traffic. That traffic is included in throughput calculations but not in goodput. Retransmissions are also included in throughput but not in goodput.
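The relationship can be sketched with some simple arithmetic. The function names and byte counts below are made up for illustration, and the split between overhead and retransmitted bytes is assumed to be known from a capture or a monitoring tool.

```python
def throughput_bps(total_bytes: int, seconds: float) -> float:
    """All bytes that crossed the network, expressed in bits per second."""
    return total_bytes * 8 / seconds


def goodput_bps(total_bytes: int, overhead_bytes: int, retransmitted_bytes: int, seconds: float) -> float:
    """Only the useful payload: total minus protocol overhead and retransmissions."""
    useful_bytes = total_bytes - overhead_bytes - retransmitted_bytes
    if useful_bytes < 0:
        raise ValueError("overhead and retransmissions exceed the total")
    return useful_bytes * 8 / seconds


# Hypothetical transfer: 10,000,000 bytes on the wire in 8 seconds, of which
# 600,000 bytes were handshake and header overhead and 400,000 bytes were retransmitted.
print(throughput_bps(10_000_000, 8))
print(goodput_bps(10_000_000, 600_000, 400_000, 8))
```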

What is the difference between throughput and bit rate?

The bit rate is the number of bits that are transmitted per second. Throughput is the achieved useful data transfer bit rate. The only difference between the two measurements is that throughput excludes data-link layer overhead.

Why does throughput of data diminish so much with distance?

Typically, networks maximize value for money by creating common links that channel data to and from several endpoints or by connecting links through switches and routers. The further a signal has to travel, the more network devices it has to pass through. Each device introduces a slight delay because it has to copy data arriving on one port over to an outgoing port. So, the more links that need to be crossed, the longer the transmission will take.

Long-distance wires that don’t have to serve branch connections also face speed problems. Data travels over a wire as an electrical pulse. Although that pulse moves along the wire at a large fraction of the speed of light, a longer wire is more susceptible to environmental interference because it has a larger surface area and passes through more surrounding space filled with conflicting noise. As a result, there is a limit to the distance that a transmission medium can usefully carry a signal. The better the quality of the medium, the longer that distance is. Long stretches require repeaters, which remove noise and boost the signal. Each repeater introduces a delay, so a long-distance transmission passes through more repeaters, which reduces throughput.

Related: Network capacity planning tutorial